Sleeping Safely in Thread Pools

A thread pool is a collection of worker threads that efficiently execute asynchronous callbacks on behalf of the application. The thread pool is primarily used to reduce the number of application threads and provide management of the worker threads. Applications can queue work items, associate work with waitable handles, automatically queue based on a timer, and bind with I/O.
– MSDN | Thread Pools

The red team community has developed a general awareness of the utility of thread pools for process injection on Windows. SafeBreach published detailed research last year describing how they may be used for remote process injection. White Knight Labs teaches the relevant techniques in our Offensive Development training course. This blog post, however, discusses another use of thread pools that is relevant to red teamers: their use as an alternative to a sleeping main thread in a C2 agent or other post-exploitation offensive capability.

This technique has been observed in use by real-world threat actors and is not a novel technique developed by myself or White Knight Labs. However, since we have not observed public discussion of the technique in red team communities, we believe it deserves more awareness. Let us now compare the standard technique of using a sleeping thread with this alternative option.

The Problem with Sleeping Threads

C2 developers often face a dilemma: their agent must be protected while it is sleeping. It sleeps because it awaits new work. While the agent sleeps, all sorts of protections have been constructed to ward off dangerous memory scanners that may hunt it in its repose. Many of those mechanisms protect its memory, such as encrypting memory artifacts or ensuring that the memory regions it occupies fly innocuous protection flags. Today we do not speak of memory protections, but rather of threads and their call stacks. Specifically, we are concerned with reducing the signature of our threads.
The reason C2 developers delve into the complexities of call stack evasion is that their agent must periodically sleep its main thread. That main thread’s call stack may include suspicious addresses indicating how it came to be run. For example, code in unbacked memory, such as dynamically allocated shellcode, may have hidden the C2 agent in safely image-backed memory before executing it. But the thread that ran the agent could still have a call stack that includes an address in unbacked memory. Therefore, the call stack must be “cleaned” in some way.

Using thread pools to periodically run functionality instead of a sleeping main thread avoids this issue. By creating a timer-queue timer (which uses the thread pool to run a callback function on a timer), the main thread can allow itself to die, safe in the knowledge that its mission of executing work will be taken up by the thread pool. Once the sleep period has elapsed, the thread pool runs whatever callback function it was set up with; this would likely be the “heartbeat” function that checks for new work. The thread pool automatically either creates a new thread with a clean call stack or hands off execution to an existing worker thread.

Comparing the Code

Let us suppose we have a simple, mock C2 agent that includes the following simplified code:

This part of our code is common between our two case studies. We have a heartbeat function that is called every SLEEP_PERIOD milliseconds. The heartbeat function checks for new work from the C2 server, executes it, and then sets up any obfuscation before it sleeps again.

We will use MDSec’s BeaconHunter and Process Hacker to inspect our process after using both techniques. BeaconHunter monitors for processes with threads in the Wait:DelayExecution state, tracks their behavior, and calculates a detection score that estimates how likely they are to be a beacon.
It was designed to demonstrate detection of Cobalt Strike’s implant, BEACON.

Sleeping Thread Example

Now suppose our agent uses a sleeping thread to wait. The simplified code would look something like this:

That is the code that runs in our hypothetical C2 implant’s main thread. All it does is sleep and then run the heartbeat function once it wakes. Now we’ll run our mock C2 agent and inspect it. With Process Hacker, you can see that the main thread spends most of its time sleeping in the Wait:DelayExecution state.

Now let’s take a look at what BeaconHunter thinks about us:

BeaconHunter has observed our sleeping main thread, as well as our callbacks on the network, and decided that we deserve a higher score for suspicious behavior.

Thread Pools Timer Example

Now let’s try rewriting our mock C2 implant to use a thread pool and timer instead. In this example, we use CreateTimerQueueTimer to create a timer-queue timer. The timer expires every SLEEP_PERIOD milliseconds, and each time it does, our callback function ticktock is executed by a thread pool worker thread. Once we have set up the timer, we exit our original thread to allow the timer to take over management of executing our heartbeat function.

Another option would be to trigger the callback on some kind of event rather than a timer. For that, you may use the RegisterWaitForSingleObject function.

Now that we have reconfigured our mock C2 implant to use a thread pool, let’s inspect our process again with Process Hacker:

This screenshot contains several interesting bits of information. Because the waiting state of our worker thread is not Wait:DelayExecution, BeaconHunter does not notice our process at all, and it is absent from the list of possible beacons:

Which Thread Pool APIs Should You Use?

If you read the MSDN article linked at the top, then you will know that there are two documented APIs for thread pools: a “legacy” API and a “new” API.
The legacy API was re-architected in Windows Vista. Before the new architecture, thread pools were implemented entirely in user mode. Now they are managed in the kernel by the TpWorkerFactory object type and are

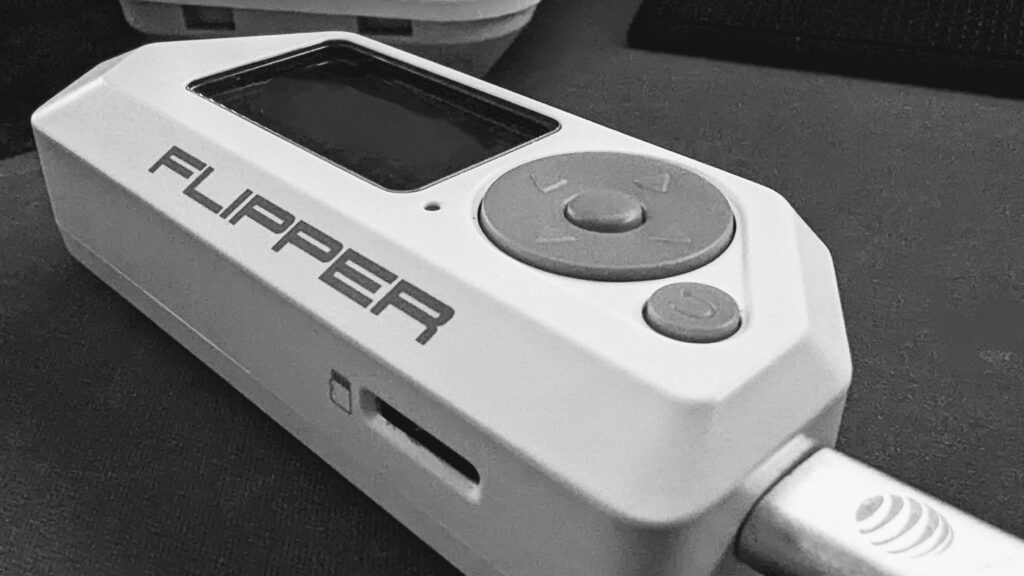

Flipper Zero and 433MHz Hacking – Part 1

What is the Flipper Zero?

The Flipper Zero can best be described as a hardware hacking multi-tool. It is an open-source, handheld hardware device. The ability to explore and access RFID, the 433 MHz spectrum, GPIO pins, UART, USB, NFC, infrared, and more is self-contained in a portable “to-go” device.

What is the 433 MHz spectrum?

Different countries set aside different parts of the radio spectrum that may be used by low-power devices (LPD) for license-free radio transmission. UHF 433.050 to 434.790 MHz is set aside in the United States for this purpose. This enables garage door openers, home security systems, and other short-range radio-controlled devices to work. In the United States, the Federal Communications Commission (FCC) is the government agency responsible for regulating and enforcing laws around the use of the electromagnetic spectrum. With a few exceptions, 47 CFR 15.201 requires “intentional radiators” to be certified, and 47 CFR 15.240 outlines requirements for such devices.

433 MHz Recon (T1596, T1592.001)

Because “intentional radiators” operating in the 433–434 MHz spectrum must be certified, various certification records are publicly available via the FCC’s website. If you look at virtually any electronic device, usually imprinted in plastic or on a label, you will find a string that starts with FCC ID: followed by an ID number. The image on the left shows the FCC ID for a security system’s keypad.

The FCC has an online “Equipment Authorization Search” site, making it fairly simple to look up FCC IDs. If you have never used this site before, note that, at the time of this writing, the dash symbol (-) must be prepended to the Product Code. If it is omitted, the search will likely fail. Once the search results load, you will see something like this:

Here we can see the application history and the frequency range this device is certified to operate within.
Clicking on “Details” presents us with several documents that can be very useful for OSINT/recon activities. By law, manufacturers are allowed to keep certain details confidential, so it is not unusual to see a “Confidentiality” letter attached to the application. The Confidentiality letter outlines what was withheld; in this case, it looks like schematics, block diagrams, and operational descriptions were all withheld.

The “Internal Photos” are a great resource for getting an overview of what the printed circuit board (PCB) looks like and what (if any) debug taps may exist. They also provide indirect clues about how the device is assembled and, thus, how it may be disassembled, reducing the risk of “breaking” the device before you get to test it.

If you don’t already have it, the “User Manual” can be a great resource. You can often find default credentials, admin IP addresses, and other useful details in it.

The “test reports” are often a wealth of information. Sometimes we can learn what modulation is being used (in this case, Frequency Shift Keying, or FSK), as well as the bandwidth and other useful details. In some cases, you may also find FCC IDs for other devices the product may contain (IoT inception). Below is the base station the example keypad connects to. If you are having difficulty locating a chip or component, the test report may contain references, model/part numbers, or other clues to help you out. At this point, it becomes an iterative process of finding the FCC ID, looking it up, going through the documents, and repeating as necessary.

Capturing some data!

So far, we have done our homework. We have learned that this keypad communicates on 433.92 MHz, that the device uses FSK, and that the expected bandwidth will be around 37.074 kHz.

Image Credit: https://docs.flipperzero.one/sub-ghz/read

The Flipper Zero comes with a frequency analyzer. To access it, go to Main Menu > Sub-GHz > Frequency Analyzer.
With the Flipper Zero close to the keypad, perform an action that triggers a wireless transmission. Sure enough, when we test the keypad in the above example, we get a reading of approximately 433.92 MHz.

To capture data, we need to go to the “Read Raw” menu: Main Menu > Sub-GHz > Read Raw

Image Credit: https://docs.flipperzero.one/sub-ghz/read-raw

We need to enter the config menu and make sure the frequency is set to 433.92 MHz. The Flipper Zero supports two FSK modes, FM238 and FM476. For now, let’s select FM238 and set the RSSI threshold to -70.0. With the configuration set, we are now ready to capture!

When we replay the capture, the base station responds with the error/warning “Wireless Interference Detected.” The device likely uses some checks to prevent direct replay attacks; a common method is rolling codes. In the next part, we will dive into how we can decode the capture and begin to make sense of what is happening behind the scenes.
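
When a Read Raw capture is saved, the Flipper Zero stores it as a plain-text .sub file, which is the natural starting point for decoding. The minimal C sketch below summarizes such a file. It assumes the RAW layout we have seen from recent firmware, which may differ between versions: a `Frequency:` header in Hz, a `Preset:` header (to our knowledge `FuriHalSubGhzPreset2FSKDev238Async` corresponds to FM238), `Protocol: RAW`, and one or more `RAW_Data:` lines of signed durations in microseconds, where positive values are high and negative values are low. `struct sub_summary` and `summarize_sub_raw` are hypothetical names of our own.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Summary of a Flipper Zero "Read RAW" capture (.sub file); hypothetical type. */
struct sub_summary {
    unsigned long frequency_hz; /* "Frequency:" header, in Hz */
    char preset[64];            /* "Preset:" header, e.g. ...2FSKDev238Async */
    size_t samples;             /* number of RAW_Data durations read */
    long high_us;               /* summed positive (high) durations, microseconds */
    long low_us;                /* summed negative (low) durations, as a positive total */
};

static int starts_with(const char *s, const char *prefix)
{
    return strncmp(s, prefix, strlen(prefix)) == 0;
}

/* Summarizes an in-memory .sub file. Returns 0 for a RAW capture, -1 otherwise. */
int summarize_sub_raw(const char *text, struct sub_summary *out)
{
    int is_raw = 0;
    assert(text != NULL && out != NULL);
    memset(out, 0, sizeof *out);

    for (const char *line = text; *line != '\0';) {
        const char *end = strchr(line, '\n');
        if (end == NULL)
            end = line + strlen(line);

        if (starts_with(line, "Frequency:")) {
            out->frequency_hz = strtoul(line + strlen("Frequency:"), NULL, 10);
        } else if (starts_with(line, "Preset:")) {
            const char *v = line + strlen("Preset:");
            size_t n;
            while (v < end && *v == ' ')
                v++;
            n = (size_t)(end - v);
            while (n > 0 && (v[n - 1] == '\r' || v[n - 1] == ' '))
                n--; /* trim trailing CR/spaces */
            if (n >= sizeof out->preset)
                n = sizeof out->preset - 1;
            memcpy(out->preset, v, n);
            out->preset[n] = '\0';
        } else if (starts_with(line, "Protocol: RAW")) {
            is_raw = 1;
        } else if (starts_with(line, "RAW_Data:")) {
            const char *q = line + strlen("RAW_Data:");
            for (;;) {
                char *next;
                long d;
                /* Skip spaces ourselves so strtol never crosses into the next line. */
                while (q < end && (*q == ' ' || *q == '\t' || *q == '\r'))
                    q++;
                if (q >= end)
                    break;
                d = strtol(q, &next, 10);
                if (next == q)
                    break; /* not a number: ignore the rest of the line */
                if (d >= 0)
                    out->high_us += d;
                else
                    out->low_us -= d;
                out->samples++;
                q = next;
            }
        }
        line = (*end == '\n') ? end + 1 : end;
    }
    return is_raw ? 0 : -1;
}
```

Because RAW captures include noise around the actual transmission, these totals are only a rough profile of the capture, not a decoded message.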

Security & Risk Assessment: Boblov KJ21

I was recently browsing a large online retailer and came across this headline for a product:

BOBLOV KJ21 Body Camera, 1296P Body Wearable Camera Support Memory Expand Max 128G 8-10Hours Recording Police Body Camera Lightweight and Portable Easy to Operate Clear Night Vision … (emphasis added)

As a former police officer, now a security researcher with a keen interest in IoT targets, I was intrigued by “Boblov”, a name I had never encountered, so I conducted some open-source intelligence (OSINT) research. I discovered from multiple sources, including the UK’s Intellectual Property Office and Boblov’s “About Us” page, that Boblov seems to be a brand under Shenzhen Lvyouyou Technology Co. Ltd. However, a preliminary search yielded little information about Shenzhen Lvyouyou Technology Co. Ltd. The Boblov brand has a website at Boblov.com, whose domain registrar, as of this writing, was listed as “Alibaba Cloud Computing (Beijing) Co., Ltd.”

Boblov’s product page for the KJ21 and their Facebook page (BOBLOVGlobal) openly advertise their range of products as “Police” body cameras. A particular Facebook post (left image) showcases the KJ21, accompanied by the hashtags “police” and “bodycamera.” On initial viewing, the imagery might strike someone as somewhat “tactical” or “law enforcement” oriented. However, upon closer examination of the video, it became evident that something was off about this impression. By pausing and zooming in on the footage, it became clear that the person featured wasn’t law enforcement but a private bail bonds agent, often colloquially known as a “bounty hunter.” While such agents provide an essential service for the companies they work for, they are not “police” or state agents.
In another striking example of Boblov seemingly failing to comprehend the market they are presumably targeting, this Facebook post (right image), at a superficial glance, might seem to feature someone who could pass for an official. However, the absence of identifiers such as a badge, name tag, and patches, along with the context of the photo, clearly indicates that this individual is not operating in an official law enforcement capacity. Nevertheless, Boblov’s caption of “Hero” coupled with the hashtag “#lawenforcement” is perplexing.

By this point, I had discovered that Boblov, a brand owned by a Chinese entity with no significant online presence, was advertising “Police Body Cameras.” However, their marketing team seemed to struggle to locate actual law enforcement officers to demonstrate their product. Alternatively, it appeared that this company, which marketed and sold “law enforcement” products in the US, did not fully comprehend the definition and composition of law enforcement.

As I dug further, I came across a customer asking for help because they could not reset their password to get into their device. Boblov’s answer: “We could send you the universal password….”

Excellent. I’m sold. I got myself a KJ21. Let the fun begin.

Assessment Purpose and Conditions

The security and risk assessment of the Boblov KJ21, referred to hereafter as “the target,” was a “black box” examination. The only information used was that which could be obtained through open sources. This risk and security assessment aims to produce a structured qualitative evaluation of the target that can assist others in making informed decisions about risk. Notably, because pre-engagement research repeatedly suggested that the target is suitable for law enforcement use or is already employed in a security or law enforcement setting, the adopted information security standards and controls reflect this operational environment.
Various tables at the end of this report provide further definitions and context.

Scope

The assessment evaluated the effectiveness of the target’s controls in eliminating or mitigating the exploitation of vulnerabilities by external or internal threats. Should the target’s controls fail, the result could be:

Boblov KJ21: Target Information

The Boblov KJ21 (“the target”) is a compact device with a rounded rectangular shape, measuring 2.95 x 2.17 x 0.98 inches and weighing approximately 14.5 ounces. The target has a USB port and a TransFlash (TF) Card slot for connectivity and storage. The camera is on the “front” side of the device, while the “rear” side features an LCD screen. Below the screen are four control buttons for reviewing video and audio content, viewing pictures, and adjusting settings. In addition, a reset button is tucked away, accessible with a small paperclip or similar pin-like object.

The device is held together by four screws (two on the front and two on the back) hidden beneath easily removable rubber plugs. You can use an unfolded paperclip to dislodge these plugs. Although a precision screwdriver kit is handy for unscrewing, once the screws are out, the KJ21 can be conveniently opened. The following items were identified on the circuit board:

#1 XT25F32B-S Quad IO Serial NOR Flash
#2 WST 8339P-4 649395188A2231
#3 TF Card Housing
#4 ETA40543 A283I (1.2A/16V Fully Integrated Linear Charger for 1 Cell 4.35V Li-ion Battery)
#5 USB Input

XT25F32B-S Quad IO Serial NOR Flash

As of this writing, the current version (1.3) of flashrom does not support the XT25F32B. Using the datasheet, I managed to customize flashrom and get it working. I provide the code you can add to the flashrom source and rebuild if desired. Although the contents could be dumped, this ultimately proved unnecessary.

TF Card Storage

The target formats the TF Card using FAT32.
At the root level, there are three main objects:

The TF Card is not encrypted, even when the device password is set and enabled. Because of this, removing the card and mounting it as a regular TF Card allows full control of the card’s contents.

Device Password

By default, the target’s password is disabled. When enabled, the default password is 000000. The operator can change the target’s password to any six-character string composed of 0-9 and A-Z. The effect of setting a password:

During the assessment, the “universal” password was discovered to be: 888888

Target USB Computer Connection

When
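
To put the KJ21 password policy into numbers: six characters drawn from 36 symbols (0-9 and A-Z) give 36^6 = 2,176,782,336 possible passwords. The minimal C sketch below computes that nominal keyspace and checks whether a candidate matches the documented format. `keyspace` and `is_kj21_password_format` are hypothetical helpers of our own, and the format check assumes ASCII and that the device accepts only uppercase letters, as the 0-9A-Z description implies.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Format described for the KJ21 password: six characters from 0-9 and A-Z. */
#define KJ21_ALPHABET_SIZE 36u
#define KJ21_PASSWORD_LEN 6u

/* Number of possible passwords: alphabet_size raised to length. */
uint64_t keyspace(uint32_t alphabet_size, uint32_t length)
{
    uint64_t total = 1;
    assert(alphabet_size > 0);
    for (uint32_t i = 0; i < length; i++) {
        assert(total <= UINT64_MAX / alphabet_size); /* guard against overflow */
        total *= alphabet_size;
    }
    return total;
}

/* Returns 1 if pw matches the documented format, 0 otherwise (assumes ASCII). */
int is_kj21_password_format(const char *pw)
{
    if (strlen(pw) != KJ21_PASSWORD_LEN)
        return 0;
    for (size_t i = 0; i < KJ21_PASSWORD_LEN; i++) {
        char c = pw[i];
        if (!((c >= '0' && c <= '9') || (c >= 'A' && c <= 'Z')))
            return 0;
    }
    return 1;
}
```

The size of that space offers little comfort in practice: the universal password 888888 is itself a valid six-character candidate, so anyone who knows it never needs to search.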

“Can’t Stop the Phish” – Tips for Warming Up Your Email Domain Right

Introduction

Phishing continues to be a lucrative vector for adversaries year after year. In 2022, for intrusions observed by Mandiant, phishing was the second most utilized vector for initial access. When Red Teaming against mature organizations with up-to-date and well-configured external attack surfaces, phishing is often the method of initial access.

To give readers a recent example, I was on an engagement where one of the goals was to bypass MFA and compromise a user’s Office365 account. The target had implemented geolocation-based restrictions and MFA for users, and had conducted user awareness training. To get past these controls, a customized version of Evilginx was hosted in the same country as the target. This server was protected behind an Apache reverse proxy and Azure CDN to get through the corporate web proxy. The phishing email contained a PDF, formatted to the target’s branding (from OSINT), with a ruse that required users to re-authenticate to their Office365 accounts as part of a cloud migration. The phish was sent using Office365 with an Azure tenant, which helped get through the email security solution (more on this later).

The campaign began, and eventually a user’s session was successfully stolen, resulting in access to business-critical data. Interestingly, although a few users reported the phish, the investigating security analyst did not identify anything malicious about the email and marked the reported phish as a false positive. The engagement concluded with valuable lessons learnt from identifying gaps in response processes and user awareness, and an ‘aha moment’ for the executives.

Reel It In: Landing The Phish

Landing an email in a recipient’s inbox can be a challenge, for both cold emailing and spear phishing. To avoid getting caught in spam filters, it’s important to follow a few best practices.

Never use typosquatted target domains.
For instance, Mimecast has an ‘Impersonation rule’ to prevent typosquatted domains from landing in an inbox. Instead, purchase an expired domain that resembles that of a vendor that may be related to the target, for instance, ‘fortinetglobal.com’ or ‘contoso.crowdstrikeglobal.com’.

I tend to rely on Microsoft’s domains. Azure tenants provide a subdomain of ‘onmicrosoft.com’. Alternatively, you could rely on Outlook (‘@outlook.com’) and impersonate a specific user from the target, such as IT support. Rely on your OSINT skills to identify the email naming syntax, signature, and profile picture of the user you want to impersonate.

Most RT shops use reputable providers such as Office365, Mailgun, Outlook, Amazon SES, or Google Workspace. Some folks still use their own Postfix SMTP server. To avoid blacklisted source IPs, you may want to use a VPN on the host you use to send emails.

Enumerate the target’s DNS TXT records to identify allowed third-party marketing providers. A quick Shodan lookup of the allowed IPs should identify the associated vendor. Rely on the same service to send your phish, for example, SendGrid or Klaviyo.

Sender Policy Framework (SPF): Identifies the mail servers and domains that are allowed to send email on behalf of your domain.

DomainKeys Identified Mail (DKIM): Digitally signs emails using a cryptographic signature, so recipients can verify that an email was sent by your domain and not spoofed by a third party.

Domain-based Message Authentication, Reporting, and Conformance (DMARC): Allows email senders to specify what should happen if an email fails authentication, meaning neither SPF nor DKIM passes for a domain aligned with the visible From address; for example, whether it should be rejected, quarantined, or delivered normally.

Words such as Free, Prize, Urgent, Giveaway, Click Here, and Discount are associated with spam or unsolicited email and can cause your email to be automatically sent to Junk or not delivered at all.

I prefer to send my phishes in three stages.
For instance, on a Friday I’d send a ‘Downtime Notification’ informing users that they may need to re-authenticate to their accounts after a scheduled maintenance activity. This email would not contain anything suspicious (no links, no action required); it’s only informational. The following Monday, I’d reply to the same email thread with the phish, requesting that users authenticate via the provided link. On Tuesday, I’d send a ‘Gentle Reminder’ email, once again replying to the same mail thread. The user compromised in the example from the introduction was phished with the ‘Gentle Reminder’.

For a targeted attack, it’s recommended to establish two-way communication with the user before sending the main phish.

With Google Workspace: Create a document themed according to the target’s branding → Embed the payload URL within the sheet → Add the victim as a ‘Commenter’ → The victim receives an email from ‘comments-noreply@docs.google.com’

With SharePoint: Create a folder or document themed according to the target’s branding → Embed the payload URL within the sheet → Provide read-only access to anyone with the URL → Share the folder/file with the victim’s email ID → The embedded link is a subdomain of ‘sharepoint.com’.

Personally, I’ve had better success landing shared files than shared folders on SharePoint. SharePoint also provides notifications when users access the document.

Rather than attaching the payload, provide a link in the email that redirects the user to the site hosting the payload. Using your own website provides benefits such as user/bot tracking and quick payload swaps. Use JavaScript to hide the payload from the <a href=""> tag, and trigger the download on an event such as onclick. Alternatively, Azure Blob Storage, Azure App Service, AWS S3 buckets, or IPFS are worth considering.

Use ‘https://www.mail-tester.com’ and Azure’s message header analyzer to test your emails. Note that just because you get a 10/10 score does not mean you will land the phish in your target’s inbox. This brings us to the idea of email sender reputation.
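
Enumerating the target’s DNS TXT records for allowed third-party senders mostly comes down to reading its SPF record: each include: mechanism names a delegated sender (for example, spf.protection.outlook.com for Microsoft 365 or sendgrid.net for SendGrid), and ip4:/ip6: mechanisms list the addresses you might look up on Shodan. Below is a minimal C sketch that pulls those mechanisms out of a record string. Fetching the TXT record is left to your usual DNS tooling, `struct spf_entry` and `spf_extract` are hypothetical names, and the parser deliberately ignores nested includes, redirect= modifiers, macros, and the case-insensitive matching that a full RFC 7208 implementation would handle.

```c
#include <assert.h>
#include <string.h>

/* One authorized-sender entry pulled from an SPF record (hypothetical type). */
struct spf_entry {
    char mechanism[8]; /* "include", "ip4" or "ip6" */
    char value[128];   /* domain or address/CIDR, truncated if longer */
};

static const char *const SPF_KINDS[] = {"include", "ip4", "ip6"};

/* Extracts include/ip4/ip6 mechanisms from an SPF TXT record string.
   Simplification: matching is case-sensitive; other mechanisms are skipped.
   Returns the number of entries written (0 if the record is not SPF). */
size_t spf_extract(const char *record, struct spf_entry *out, size_t max_out)
{
    size_t count = 0;
    const char *p;
    assert(record != NULL && out != NULL);

    if (strncmp(record, "v=spf1", 6) != 0 || (record[6] != ' ' && record[6] != '\0'))
        return 0;

    p = record + 6;
    while (*p != '\0' && count < max_out) {
        const char *tok;
        size_t len;
        while (*p == ' ')
            p++;
        tok = p;
        while (*p != '\0' && *p != ' ')
            p++;
        len = (size_t)(p - tok);
        if (len == 0)
            break;
        /* Drop an optional qualifier (+, -, ~, ?). */
        if (strchr("+-~?", *tok) != NULL) {
            tok++;
            len--;
        }
        for (size_t k = 0; k < sizeof SPF_KINDS / sizeof SPF_KINDS[0]; k++) {
            size_t klen = strlen(SPF_KINDS[k]);
            if (len > klen + 1 && strncmp(tok, SPF_KINDS[k], klen) == 0 && tok[klen] == ':') {
                size_t vlen = len - klen - 1;
                if (vlen >= sizeof out[count].value)
                    vlen = sizeof out[count].value - 1;
                strcpy(out[count].mechanism, SPF_KINDS[k]);
                memcpy(out[count].value, tok + klen + 1, vlen);
                out[count].value[vlen] = '\0';
                count++;
                break;
            }
        }
    }
    return count;
}
```

Because each include: domain publishes an SPF record of its own, a thorough pass would repeat the lookup for every domain this returns.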
Warm It Up

Email sender reputation refers to the perceived trustworthiness of the sender of an email, as determined by various email service providers and spam filters. This reputation is based on a number of factors, such as the sender’s email address, the content of the email, and the frequency and consistency of sending emails. When new senders appear, they’re treated more suspiciously than senders with a previously established history of sending email messages (think of it as a probation period). For instance, if you try to send more than 20 emails