In the first part of this series, we explored the methodology to identify vulnerable drivers and understand how they can expose weaknesses within Windows. That foundation gave us the tools to recognize potential entry points. In this next stage, we will dive into the techniques for loading those drivers in a stealthy way, focusing on how to integrate them into the system without triggering alarms or leaving obvious traces. This chapter continues building on the research path, moving from discovery to discreet execution.

The .sys file and normal loading

A Windows driver is usually a .sys file, which is just a Portable Executable (PE) like an .exe or .dll, but designed to run in kernel mode. It contains code sections, data, and a main entry point called DriverEntry, which is executed when the system loads the driver.

Drivers are normally installed with an .inf file, which tells Windows how to set them up. During installation, the system creates a corresponding entry in the Registry under:

HKLM\SYSTEM\CurrentControlSet\Services\<DriverName>

This entry defines the location of the .sys file (typically in System32\drivers), and when it should start (boot, system, or on demand).
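As a rough illustration of how such an entry can be read, the sketch below decodes the documented numeric `Start` and `Type` values of a service key into readable names. The `describe_service` helper and the example entry are hypothetical; the values dictionary stands in for what would be read from the Registry.

```python
# Documented numeric values of "Start" and "Type" under a Services key.
# Only the most common Type values are listed here.
START_TYPES = {
    0: "boot",
    1: "system",
    2: "automatic",
    3: "demand",
    4: "disabled",
}
SERVICE_TYPES = {
    0x1: "kernel driver",
    0x2: "file system driver",
    0x10: "own-process service",
    0x20: "shared-process service",
}


def describe_service(values: dict) -> dict:
    """Summarize the registry values of one service entry (hypothetical helper)."""
    start = values.get("Start")
    svc_type = values.get("Type")
    return {
        "image_path": values.get("ImagePath"),
        "start": START_TYPES.get(start, f"unknown ({start})"),
        "type": SERVICE_TYPES.get(svc_type, f"unknown ({svc_type})"),
        # Types 1 and 2 are the ones that run in kernel mode.
        "is_driver": svc_type in (0x1, 0x2),
    }


# Hypothetical entry for an on-demand kernel driver.
example = {"ImagePath": r"System32\drivers\example.sys", "Start": 3, "Type": 1}
print(describe_service(example))
```

On a Windows host, the same dictionary could be filled from the real key with the standard `winreg` module.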

How an EDR detects malicious driver loads and the telemetry involved

Drivers in Windows operate in kernel mode, which grants them the highest level of privileges on the system. This makes them a prime target for attackers looking to hide processes, escalate privileges, or bypass security defenses. One of the most common tactics seen in advanced attacks is the loading of malicious or vulnerable drivers, a technique that allows adversaries to gain control at the deepest layer of the operating system.

To counter this, an EDR solution continuously monitors system activity, gathering telemetry that helps uncover suspicious driver behavior. Detection is not based on a single signal, but on the correlation of multiple events, such as process activity, registry modifications, certificate validation, and kernel-level actions.

Malicious drivers are usually introduced in a few key ways. Attackers may attempt to load unsigned drivers or use stolen and revoked certificates to trick the system into accepting them. Another common approach is known as Bring Your Own Vulnerable Driver (BYOVD), where a legitimate but flawed driver is installed and then exploited to run arbitrary code in kernel space. Drivers can also be manually loaded using system tools or APIs like NtLoadDriver, sometimes disguised as administrative tasks.

Because of these attack vectors, EDR platforms pay close attention to four core areas of telemetry:

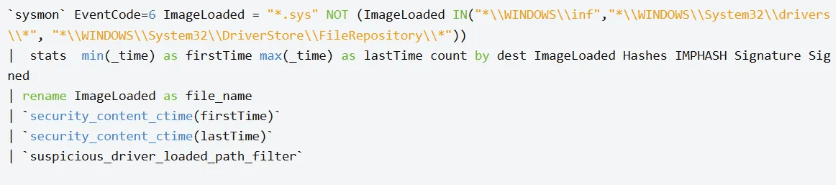

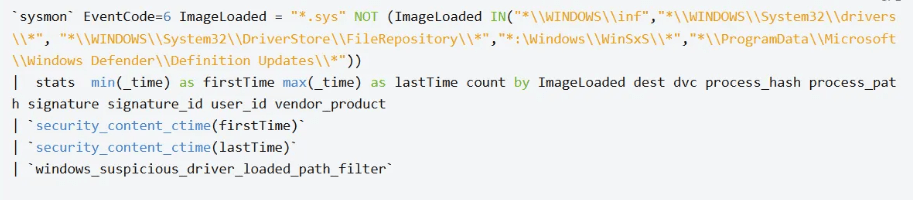

- System events: Logs that show when drivers are loaded, installed, or modified (for example, Sysmon Event ID 6 for driver load events).

- Image load notifications: The EDR's own kernel driver registers a callback with PsSetLoadImageNotifyRoutine, which is invoked for every image that is loaded, including drivers.

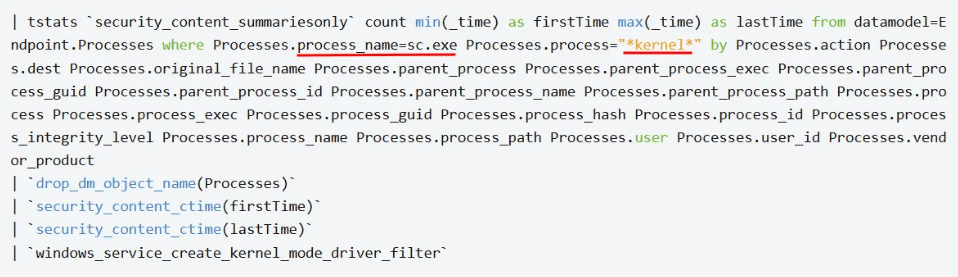

- Process and service monitoring: Detection of new kernel-level services, unexpected calls to driver-loading APIs, or unusual use of utilities like sc.exe or drvload.exe.

- Digital signature validation: Checking whether the driver is properly signed, and flagging issues such as missing signatures, revoked certificates, or suspicious publishers.

By gathering and correlating these signals, an EDR can quickly spot when a driver does not behave like a legitimate one, raising an alert before the attacker gains full control of the system.

Detection rules

Let’s start by looking at some of the most well-known detection rules used to identify malicious drivers.

The previously presented rules flag driver loads originating from atypical file paths. This heuristic is trivial to circumvent: an adversary can install the driver under a standard system directory (for example, C:\Windows\System32\drivers), where simple path-based detections will likely fail.

Defenders can close this gap easily, and even if that specific alert does not fire, an EDR still records every driver loaded on the system. Dropping a driver into a standard path does not, by itself, make the load stealthy.
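To make the limitation concrete, here is a minimal sketch of a path-based heuristic of this kind. It is not taken from any specific rule set: the `is_atypical_driver_path` name and the single allowed directory are assumptions chosen for illustration.

```python
import ntpath

# Assumption: the only directory this toy heuristic treats as "typical".
TYPICAL_DRIVER_DIR = r"c:\windows\system32\drivers"


def is_atypical_driver_path(image_path: str) -> bool:
    """Return True when the image sits outside the typical driver directory."""
    # ntpath applies Windows path rules on any platform; normcase lowercases
    # the path and converts forward slashes to backslashes.
    normalized = ntpath.normcase(ntpath.normpath(image_path))
    return ntpath.dirname(normalized) != TYPICAL_DRIVER_DIR


print(is_atypical_driver_path(r"C:\Users\Public\example.sys"))
print(is_atypical_driver_path(r"C:\Windows\System32\drivers\example.sys"))
```

The check looks only at the directory, so any file placed in the standard location passes regardless of what it contains. A real rule would also need to handle other path forms, such as paths that start with `\SystemRoot\` or `\??\`.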

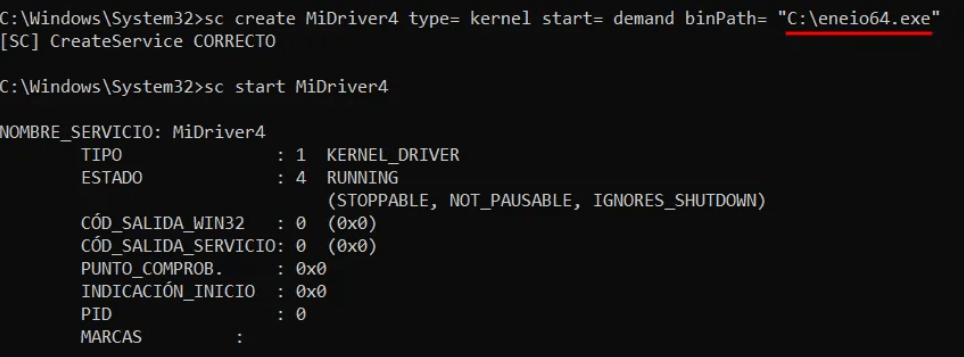

Both rules rely on the .sys file extension as an indicator of driver files. Consequently, using an alternative extension (for example, .exe) would bypass those specific checks. However, can a driver actually be loaded from a file whose extension is not .sys?

Indeed, it is possible to load a driver using a file that does not have a .sys extension.

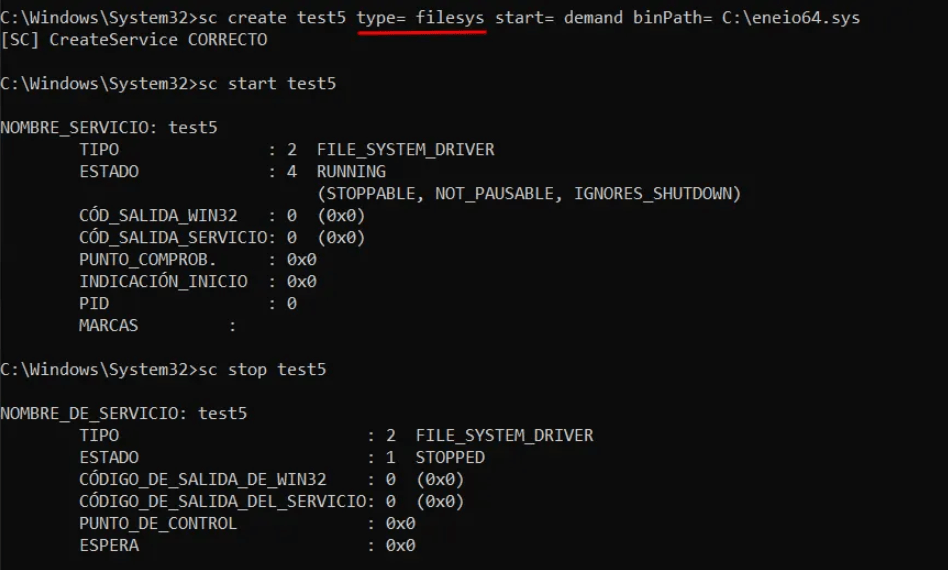

A frequently used detection rule flags the creation of services with type=kernel when performed via the sc.exe command-line tool. Below is an example:

This rule is harder to bypass because sc.exe normally requires type=kernel to create a service for a kernel-mode driver. According to Microsoft's documentation, however, sc.exe also accepts an alternative service type, type=filesys, intended for file system drivers, so a rule that matches only type=kernel would not cover it.
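A command-line check of this kind can be sketched as follows. This is an illustrative approximation, not a reproduction of any published rule: the function name and regular expressions are assumptions, and the pattern covers both `kernel` and `filesys` for the reason given above.

```python
import re

# sc.exe accepts both "kernel" and "filesys" as driver service types.
# Optional whitespace after "type=" is tolerated.
DRIVER_TYPE = re.compile(r"\btype=\s*(kernel|filesys)\b", re.IGNORECASE)

# Matches "sc" or "sc.exe" at the start of the line or after a path
# separator, whitespace, or quote.
SC_BINARY = re.compile(r'(^|[\\/\s"])sc(\.exe)?["\s]', re.IGNORECASE)

CREATE_VERB = re.compile(r"\bcreate\b", re.IGNORECASE)


def is_driver_service_creation(command_line: str) -> bool:
    """Flag sc.exe invocations that create a kernel or file system driver service."""
    return (
        SC_BINARY.search(command_line) is not None
        and CREATE_VERB.search(command_line) is not None
        and DRIVER_TYPE.search(command_line) is not None
    )


# Hypothetical command lines, as they might appear in process telemetry.
print(is_driver_service_creation(r"sc.exe create demo type= kernel binPath= C:\demo.sys"))
print(is_driver_service_creation(r"sc.exe create demo type= own binPath= C:\demo.exe"))
```

Matching on the command line only covers services created through sc.exe. Services created through other tools or APIs would have to be caught by the other telemetry sources described earlier.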

Digital signature

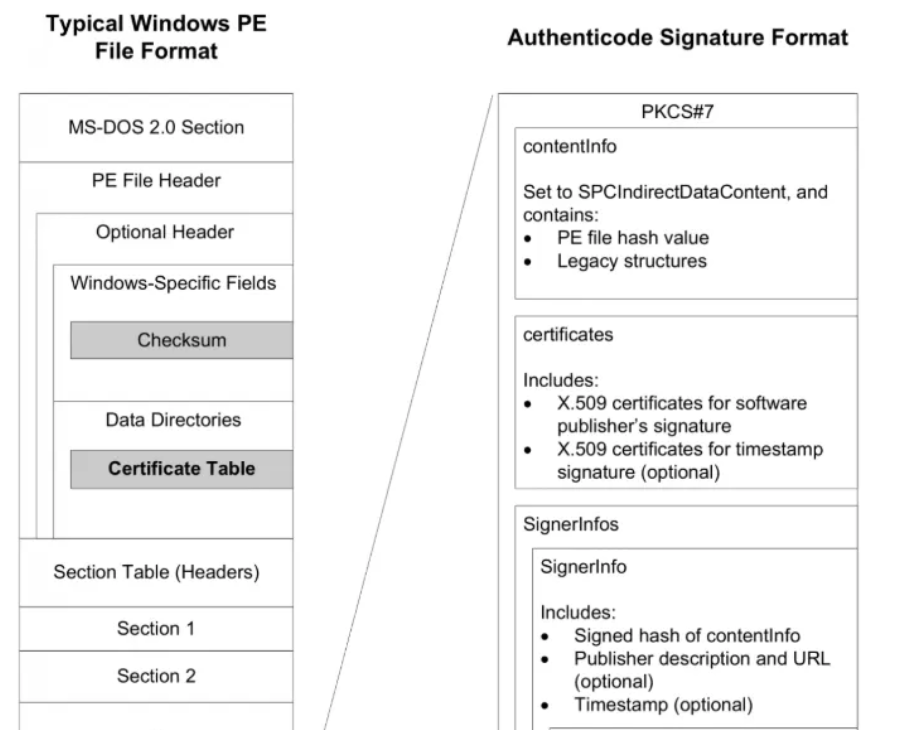

A digital signature for a Windows driver is a cryptographic mark that confirms both the authenticity and integrity of the driver. In other words, it tells Windows that the driver really comes from the stated manufacturer and hasn’t been altered since it was signed. Without this signature, Windows may block the driver from being installed.

The process starts with the developer creating the driver. Before distribution, the driver is signed using a certificate issued by a trusted certificate authority. The developer creates the signature with the private key associated with that certificate, and Windows can later verify it using the corresponding public key, which the certificate contains. During installation, Windows checks the signature and ensures that it is valid and trusted. If any part of the driver covered by the signature is modified after signing, the signature becomes invalid, and Windows will warn the user or prevent installation.

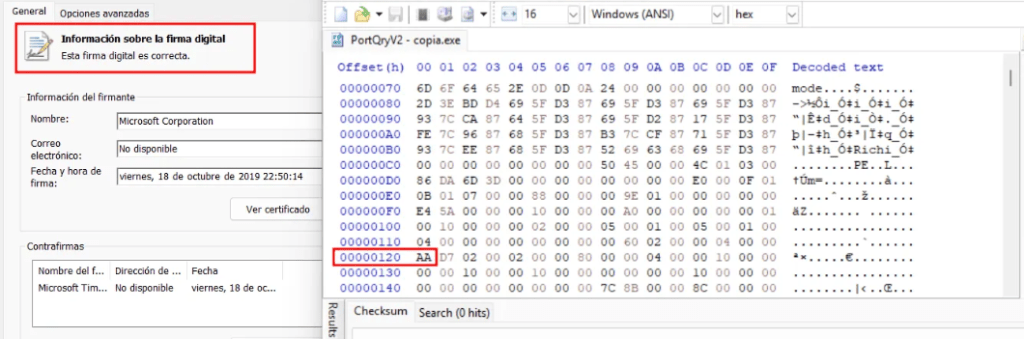

Well, that’s the theory. In practice, however, it is possible to change a driver’s file hash without invalidating its digital signature, so the driver remains signed and appears trustworthy. As can be seen in the following image, several fields are excluded from the hash calculation used for the signature.

This is not only possible with .sys files, but can also be done with any PE (Portable Executable), such as .exe or .dll files. Let’s look at some examples:

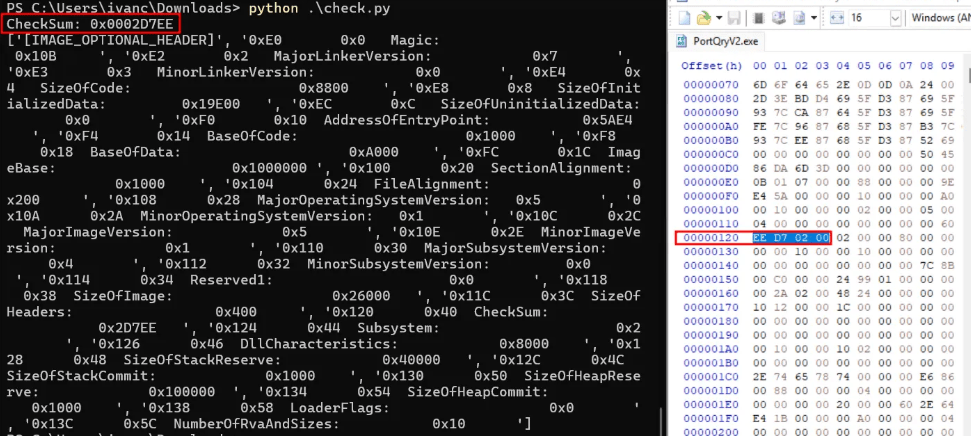

In these examples, we will modify the Checksum field of a PE file. But before we begin, what exactly is a checksum?

When the Portable Executable (PE) format was created, network connections were far less reliable than they are today, making file corruption during transfer a common problem. This was especially risky for critical files like executables and drivers, where even a single-byte error could crash the system. To address this, the PE format includes a checksum field designed to detect file corruption and reduce the chance of running broken code. A checksum is a simple calculation that produces a short value derived from a file’s contents. Accidental changes to the file almost always change the checksum, making it a useful way to spot corruption. Collisions are possible, and a checksum is not a cryptographic hash, so it is effective against accidental errors rather than deliberate tampering.

You can create a script that displays the checksum value and then modify it using a hexadecimal editor. For this example, an EXE format PE will be used, but it would have the same effect on a DLL or SYS.
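A minimal sketch of such a script is shown below. It assumes a well-formed PE file and relies on the documented header layout: the PE header offset is stored at 0x3C, and the CheckSum field sits 64 bytes into the optional header for both PE32 and PE32+. The function name is an arbitrary choice.

```python
import struct


def read_pe_checksum(data: bytes) -> tuple[int, int]:
    """Return (file offset of the CheckSum field, stored checksum value)."""
    if data[:2] != b"MZ":
        raise ValueError("not a PE file: missing MZ header")

    # e_lfanew, at offset 0x3C, points to the "PE\0\0" signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("not a PE file: missing PE signature")

    # The 4-byte signature and the 20-byte COFF header precede the
    # optional header; CheckSum is 64 bytes into the optional header.
    checksum_offset = pe_offset + 4 + 20 + 64
    (checksum,) = struct.unpack_from("<I", data, checksum_offset)
    return checksum_offset, checksum


# Usage, with a hypothetical file name:
#   with open("example.exe", "rb") as f:
#       offset, value = read_pe_checksum(f.read())
#   print(f"CheckSum at 0x{offset:X}: 0x{value:08X}")
```

The returned offset is the location to look for in the hexadecimal editor; the field is four bytes long and stored in little-endian order.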

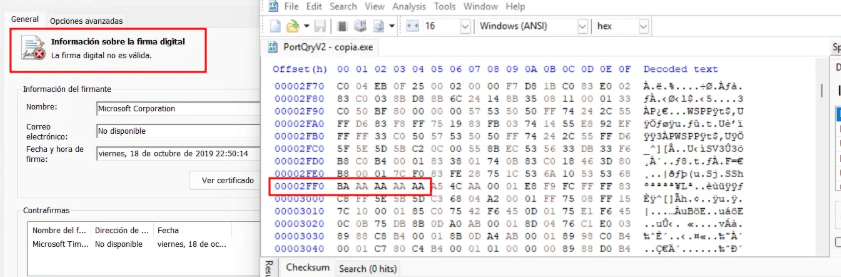

As shown in the image below, modifying random values in the binary invalidates the signature.

On the other hand, if we modify the values inside the checksum field, we can alter the hash while keeping the digital signature valid.

The same effect can be achieved through the WIN_CERTIFICATE structure, since the attribute certificate table is likewise excluded from the hash calculation.

Using these techniques, we can evade some of the detection rules implemented by defensive tooling, but we cannot evade Microsoft’s list of blocked drivers: that list relies on the Authentihash rather than on file hashes such as MD5, SHA1, or SHA256, which are the only ones this technique changes.
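The difference between the two kinds of hash can be sketched in a few lines. The code below is a simplified approximation of an Authenticode-style digest, not a full implementation: it hashes the whole file while skipping the CheckSum field, the Certificate Table entry of the data directory, and the certificate table itself. Real implementations also process sections in a defined order, so the value may not match signing tools for every file. The function names are assumptions.

```python
import hashlib
import struct


def excluded_ranges(data: bytes) -> list[tuple[int, int]]:
    """Byte ranges skipped by this simplified Authenticode-style digest."""
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    opt = pe_offset + 24  # 4-byte signature + 20-byte COFF header
    (magic,) = struct.unpack_from("<H", data, opt)
    if magic == 0x10B:      # PE32
        directories = opt + 96
    elif magic == 0x20B:    # PE32+
        directories = opt + 112
    else:
        raise ValueError("unknown optional header magic")

    checksum_off = opt + 64
    cert_entry_off = directories + 4 * 8  # Certificate Table is entry 4
    # For this entry, the first field is a file offset, not an RVA.
    cert_off, cert_size = struct.unpack_from("<II", data, cert_entry_off)

    ranges = [(checksum_off, checksum_off + 4),
              (cert_entry_off, cert_entry_off + 8)]
    if cert_off and cert_size:
        ranges.append((cert_off, cert_off + cert_size))
    return sorted(ranges)


def authenticode_style_digest(data: bytes) -> str:
    """SHA-256 over the file with the excluded ranges skipped."""
    digest = hashlib.sha256()
    position = 0
    for start, end in excluded_ranges(data):
        digest.update(data[position:start])
        position = end
    digest.update(data[position:])
    return digest.hexdigest()


def file_sha256(data: bytes) -> str:
    """Plain SHA-256 over every byte of the file."""
    return hashlib.sha256(data).hexdigest()
```

Comparing `file_sha256` and `authenticode_style_digest` on two copies of a file that differ only in the CheckSum field gives different file hashes but the same Authenticode-style digest, which is why a blocklist keyed on the latter is unaffected.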

Conclusion

These techniques are intriguing from a research perspective, and they can sometimes be applied successfully in practice because some organizations do not enforce very strict rules for detecting vulnerable driver loading. The main reason is that such restrictive policies would likely generate a significant number of false positives, which could disrupt normal operations, increase administrative overhead, and reduce overall system usability. As a result, companies often adopt a more balanced approach, prioritizing operational continuity over the strict enforcement of these controls, unless they are operating in highly sensitive or security-critical environments.