Overview

Agentic AI development is undergoing a rapid uptake as organizations seek methods to incorporate generative AI models into application workflows. In this blog, we will look at the components of an agentic AI system, some related security risks, and how to start threat modeling.

Agentic AI means that the application has agents performing autonomous actions in the application workflow. These autonomous actions are intrinsic to the normal functioning of the application. Writing an application that uses an AI model via an API call—to supplement its operation but without any autonomous aspect—is not considered agentic.

You can think of the agents in an agentic AI application to be analogous to a simulated human that’s performing some specific goal or objective. The agents in the system will be configured to access tools and external data, often via a protocol, such as Model Context Protocol (MCP). An agent will use the information advertised about an external tool to decide whether the tool is optimal for achieving that agent’s specific goal or objectives. Agents will act as task specialists with a specific role—to solve a specific part of the workflow.

Having autonomy means that the agent will not necessarily follow the same workflow each time during operation. In fact, if an application developer/architect is looking for a deterministic (and thus, more algorithmic, execution), then an agentic AI based implementation is not a good choice.

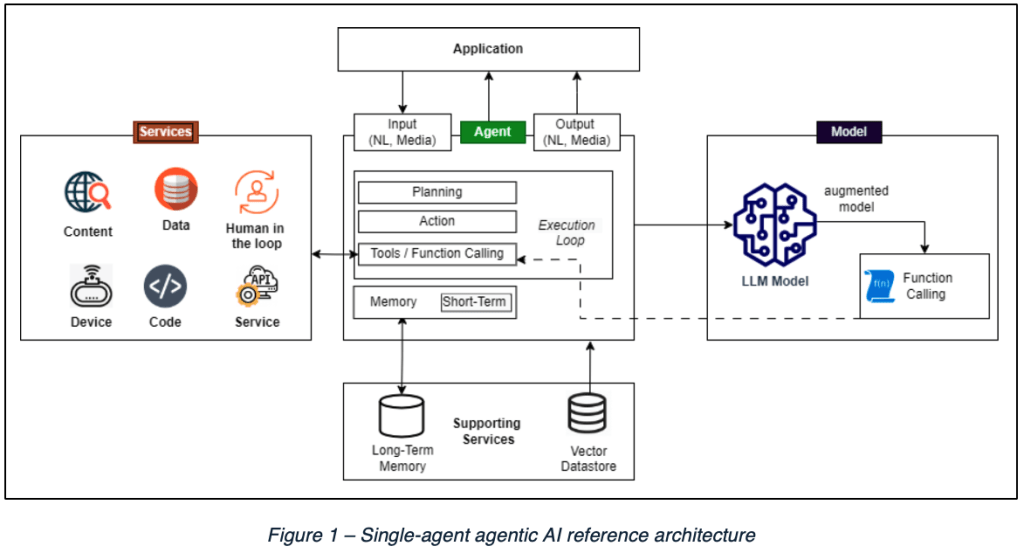

In the diagram below, the Open Web Application Security Project (OWASP) shows us a reference architecture for a single-agent system. This helps to give us a better sense of the autonomous aspects of an agentic AI implementation. The actual agent components in the continuous agentic-execution loop include:

- Planning

- Action

- Tool selection and function calling

You can see in this simplified view how the agent will have access to external services via the agentic tools, and that components also include some form of short-term memory and vector storage. The concept of using a vector storage database is important because it is central to how Retrieval Augmented Generation (RAG) works, with large language model (LLM) responses augmented by RAG at inference time.

Communications to an agentic AI-based application are likely going to be using some form of JSON/REST API to the agent from, say, a web frontend or to an orchestrator agent, in the case of multi-agent systems.

LLM Interactions Are Like Gambling

LLMs are non-deterministic by nature. We can easily observe this phenomenon by using a chat model and supplying the same prompt multiple times to a chat session. You will discover that you do not get the same results each time, even with the most carefully crafted and explicit prompts and instructions.

Further complicating the non-deterministic challenge is how easy it is to attack LLMs using social engineering. Although guardrails are typically in place in both model training and at model use (inference), with some creativity, it is not difficult to evade guardrails and convince the LLM to generate results that reveal sensitive data or generate inappropriate content.

A typical prompt for an LLM is broken into two components: one is the “system prompt” and the other is the “user prompt.” As with all LLM prompting, the system prompt is typically used to set a role and persona for the model and is prepended to any user-prompt activity. A known security risk can occur, whereby a developer thinks that the system prompt is a secure place to store data (for example, credentials or API keys). Using social engineering tactics, it is not difficult to get an LLM to reveal the contents of the system prompt.

Most LLM usage is very much like speaking with a naïve child or an inexperienced intern. You must be very explicit in your instructions, and the digitally simulated reasoning from the generative pretrained transformer (GPT) architecture might still get things wrong. This means that creating the prompting aspects of any agentic AI implementation is going to be a time-consuming, iterative process to achieve a working result that will never truly be 100% accurate.

Autonomous Gambling with Agents

In an agentic AI application, there are potentially many agents that are interacting with LLMs that yield a multiplicative effect surrounding non-determinism. This means that building an application testing plan for such a system becomes very difficult. Further amplifying this challenge is the adoption of MCP servers to perform tasks—tasks that might be third-party, remote services not under the authorship of the organization developing the application.

MCP is a proposed standard for agentic tool communications using JSON RPC 2.0 introduced by Anthropic in November of 2024. An MCP server has different embedded components that include:

- Resources: Data sources that provide contextual information to AI applications (e.g., file contents, database records, API responses)

- Tools: Executable functions that AI applications can invoke to perform actions (e.g., file operations, API calls, database queries)

- Prompts: Reusable templates that help structure interactions with language models (e.g., system prompts, few-shot examples)

MCP servers can run as local endpoint entities, remote (over network) entities in a server, or hosted services. Unfortunately, the MCP proposed was entirely focused on functionality with little regard to security risks. Potential security concerns include:

- Limited or No Role-Based Access Control (RBAC):

- After an MCP server is authenticated, there are no standard RBAC controls in place (unless developed by the implementer).

- Over-privileged credentials/tokens/API keys are often granted to an MCP server.

- Identity Spoofing / Imersonation:

- Typical MCP servers act as middleware proxying requests to some other API, such as Slack messaging or a calendaring API.

- There was no proposed method of identity management and authorization in the original specification. However, OAuth now has several applicable RFCs that address these concerns (that are now amended in MCP standards).

- It is not clear how pass-through authentication from applications should be implemented.

- Insufficient Logging and Monitoring: Most examples of MCP servers just do not implement logging at all, and developers are prone to take the shortest path to success.

- Unvetted MCP Server Tools: The danger of using a remote MCP server that’s advertised to perform the functions you need can be risky; how do you know it can be trusted?

- Lack of Human Approval in Workflows: In the case of agentic use, often, no human approval will be obtained for intermediate goals achieved by agent components.

The Cloud Security Alliance (CSA) has sponsored the authoring of a Top 10 MCP Client and MCP Server Risks document, which is now maintained by the Model Context Protocol Security Working Group.

| Risk | Title | Description | Impact |

| MCP-01 | Prompt Injection | Malicious prompts manipulate server behavior (via user input, data sources, or tool descriptions) | Unauthorized actions, data exfiltration, privilege escalation |

| MCP-02 | Confused Deputy | Server acts on behalf of the wrong user or with incorrect permissions | Unauthorized access, data breaches, system compromise |

| MCP-03 | Tool Poisoning | Malicious tools masquerade as legitimate ones or include malicious descriptions | Malicious code execution, data theft, system compromise |

| MCP-04 | Credential & Token Exposure | Improper handling or storage of API keys, OAuth tokens, or credentials | Account takeover, unauthorized API access, data breaches |

| MCP-05 | Insecure Server Configuration | Weak defaults, exposed endpoints, or inadequate authentication | Unauthorized access, data exposure, system compromise |

| MCP-06 | Supply Chain Attacks | Compromised servers or malicious dependencies in the MCP ecosystem | Widespread compromise, data theft, service disruption |

| MCP-07 | Excessive Permissions & Scope Creep | Servers request unnecessary or escalating privilege | Increased attack surface, greater damage if compromised |

| MCP-08 | Data Exfiltration | Unauthorized access or transmission of sensitive data via MCP channels | Data breaches, regulatory non-compliance, privacy violation |

| MCP-09 | Context Spoofing & Manipulation | Manipulation or spoofing of context information provided to models | Incorrect model behavior, unauthorized actions, security bypass |

| MCP-10 | Insecure Communication | Unencrypted or insecure communication channels (weak TLS, MITM) | Data interception, credential theft, communication tampering |

MCP Servers – Top 10 Security Risks

For agentic application development, there are several agentic AI frameworks, many of which are focused on agent orchestration. Model context protocol is useful for implementing tools that access external resources, but further orchestration is needed, especially in multi-agent architecture. Examples of orchestration frameworks include:

Also, there are more cloud provider-centric approaches for agentic orchestration, such as AWS Strands and Microsoft Semantic Kernel. While almost all the various frameworks are Python language centric, Microsoft has chosen to integrate into their .NET ecosystem, with C# being its foundational language.

Google has proposed Agent-to-Agent (A2A) protocol to further complement multi-agent development and supplement MCP. As the name suggests, A2A allows different agents to communicate with each other using a standard communications method.

Threat Modeling Agentic AI Applications

Existing threat modelling frameworks, such as Microsoft STRIDE, LINDUN or PASTA, are not quite comprehensive enough to cover the unique nature of agentic AI applications. With AI applications, we see threats, such as adversarial machine learning and data poisoning. We also see, as mentioned above, the dynamic, non-deterministic nature of LLM usage within agentic AI applications, as well as the autonomous nature of agents.

MAESTRO (Multi-Agent Environment, Security, Threat, Risk, and Outcome)

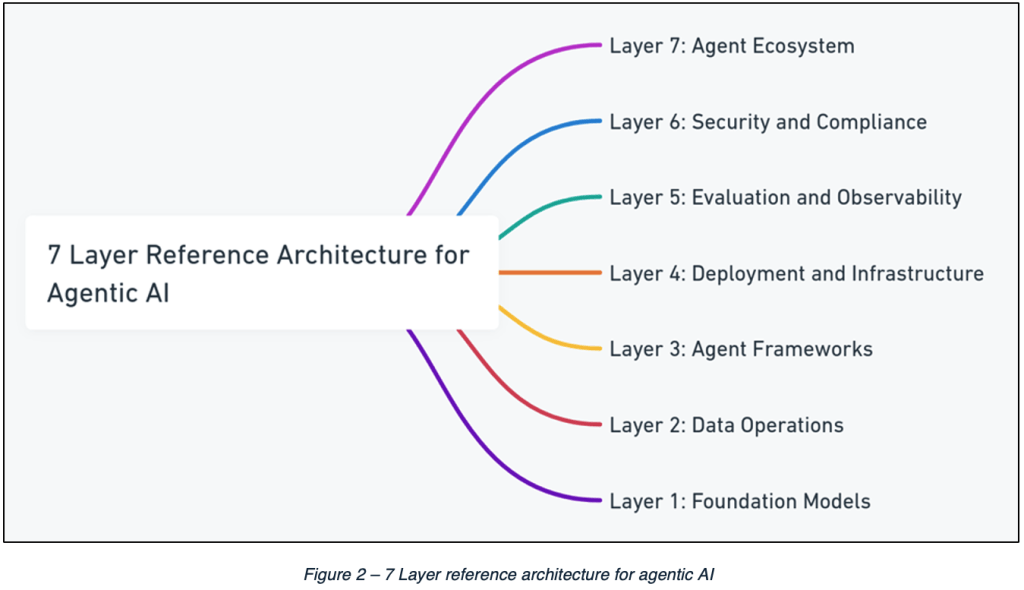

MAESTRO is built around a seven-layer reference architecture for agentic AI applications:

Given these weaknesses in existing frameworks, the cloud security alliance proposed MAESTRO in February 2025. This methodology was published in a blog authored by Ken Huang, the CEO of DistributedApps.ai.

The descriptions of these layers (as written in the blog post) are as follows:

- Layer 7: Agent Ecosystem: The ecosystem layer represents the marketplace where AI agents interface with real-world applications and users. This encompasses a diverse range of business applications, from intelligent customer service platforms to sophisticated enterprise automation solutions.

- Layer 6: Security and Compliance: This vertical layer cuts across all other layers, ensuring that security and compliance controls are integrated into all AI agent operations. This layer assumes that AI agents are also used as a security tool.

- Layer 5: Evaluation and Observability: This layer focuses on how AI agents are evaluated and monitored, including tools and processes for tracking performance and detecting anomalies.

- Layer 4: Deployment and Infrastructure: This layer involves the infrastructure on which the AI agents run (e.g., cloud, on-premise).

- Layer 3: Agent Frameworks: This layer encompasses the frameworks used to build the AI agents, for example toolkits for conversational AI, or frameworks that integrate data.

- Layer 2: Data Operations: This is where data is processed, prepared, and stored for the AI agents, including databases, vector stores, RAG (Retrieval-Augmented Generation) pipelines, and more.

- Layer 1: Foundation Models: The core AI model on which an agent is built. This can be a large language model (LLM) or other forms of AI.

The blog post also includes a section on how to apply the MAESTRO methodology, using the following step-by-step approach:

- System Decomposition: Break down the system into components according to the seven-layer architecture. Define agent capabilities, goals, and interactions.

- Layer-Specific Threat Modeling: Use layer-specific threat landscapes to identify threats. Tailor the identified threats to the specifics of your system.

- Cross-Layer Threat Identification: Analyze interactions between layers to identify cross-layer threats. Consider how vulnerabilities in one layer could impact others.

- Risk Assessment: Assess likelihood and impact of each threat using the risk measurement and risk matrix, prioritize threats based on the results.

- Mitigation Planning: Develop a plan to address prioritized threats. Implement layer-specific, cross-layer, and AI-specific mitigations.

- Implementation and Monitoring: Implement mitigations. Continuously monitor for new threats and update the threat model as the system evolves.

OWASP Agentic AI Threats and Mitigations

OWASP continues to be a great resource for distilling security risk information into tactical and highly accessible documents. In that tradition, OWASP has published a Top 10 list for LLM applications and their generative AI security initiative.

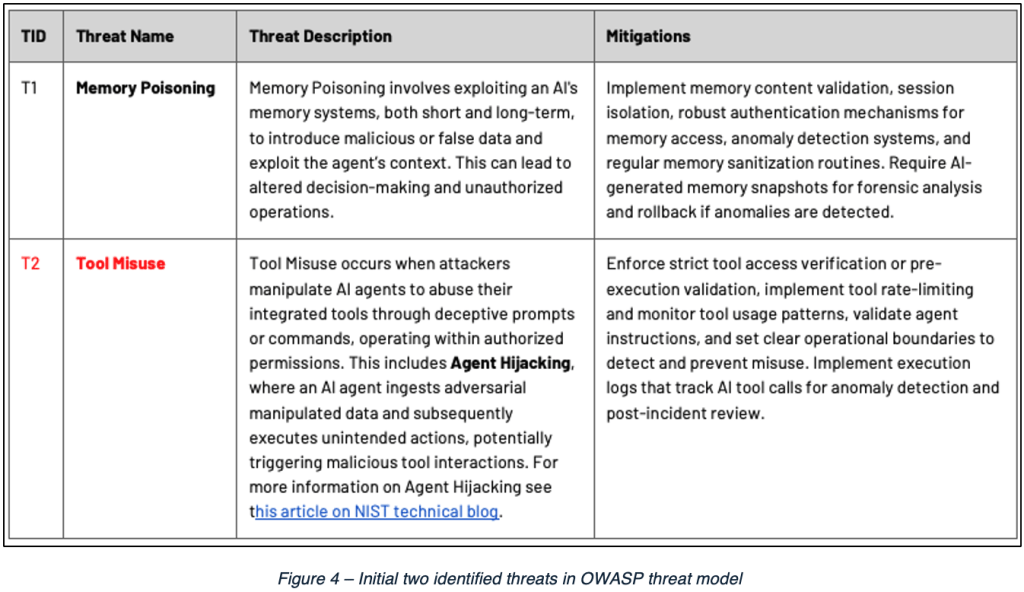

As a part of this effort, OWASP has documented a threat model against their own agentic AI reference architecture diagram, with 17 different identified threats.

What I find particularly appealing about this approach is that, by mapping threats to an architecture, OWASP gives a sense of the context in which their documented threats in the model are most applicable.

For each of these threats, OWASP includes a table with a threat identifier, threat name, description, and possible mitigations. While I will not list all these identified threats, below is a screenshot of the first two threats in the table.

Conclusions

I think that cybersecurity professionals need to zoom out and consider the broader perspective of what is happening around us. There is no doubt that we are living through a dramatic, paradigm-shifting change in Information Technology at present, and that we are firmly in a rapid adoption hype cycle.

As organizations adopt these technologies, there is going to be the usual tendency to use AI for all things—the hammer, if you will, with all problems around us as the nail. Adopting an agentic AI implementation in all areas is not always the right solution.

Accompanying this is the rapid uptick in AI-assisted programming (vibe coding), which is going to continue introducing vulnerabilities into end products. This issue alone is largely about insufficient data provenance in training models, poor LLM prompting skills on behalf of developers, and the pressure to deliver even faster based on the task acceleration enabled by code-generating AI models.

In recent years, I have seen examples of web application scaffolding that surrounds AI agentic implementation be more vulnerable than prior web applications. It is frustrating to experience a backslide in overall security during this rush-to-adoption period.

We have moved from a world whereby most technology solutions were deterministic to a world whereby a significant proportion of our software components are non-deterministic (as is the digitally simulated reasoning aspect of LLMs). Like in the early days of the explosive dot-com growth period in the late 90s, or the rapid adoption of cloud technologies in the 21st century, mistakes will be made.

In the cybersecurity community, we have traditionally been bad at several things (and have not really come up with good solutions to some thorny problems). Two standout issues that concern me most are: managing non-human identities for agentic tools to use; and managing the risk present with autonomous simulated humans in the mix. In fact, any sort of autonomy present in agentic AI implementations will increase security risk.

Organizations must decide what mix of autonomous agent-driven, non-deterministic components and good old algorithmic, deterministic components should exist in their software implementations. The risks should be clearly explained so that decision makers can decide what their risk appetite is, and what components of an application are well-suited for agentic AI implementation. The components that are better implemented in a more deterministic fashion should also be identified. Sometimes less is more!

As we look at the new risks in front of us with agentic AI, there is a tendency to react strongly and just say, “No, don’t do that” to interested organizations. We need to keep our professional consulting hats on and modify this answer to, “Yes, you can do that, but here are the risks . . .”

Cybersecurity, while incredibly important, represents overhead costs to organizations, so we must continue to carefully enable business activity while informing on risk levels and risk mitigation steps as we move through this paradigm shift.