In the prior two blogs within this UEFI Vulnerability Analysis using AI series, I described my research on analyzing enormous codebases, starting with UEFI firmware. The first two articles dealt with extending the open-source Large Language Model (LLM) token context window (effectively, what the LLM can hold in memory all at one time). In this article, the use of Knowledge Graphs to improve AI reasoning powers is investigated on the NVIDIA DGX Spark.

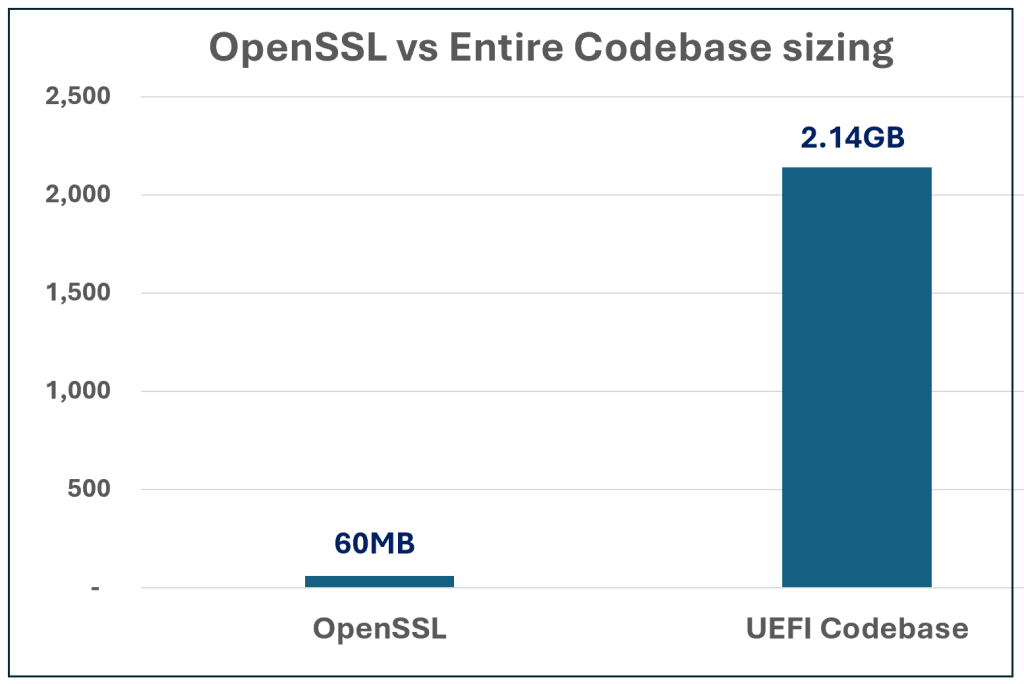

In Part 1, I used the ChatGPT frontier model to analyze the OpenSSL module within an older version of the open-source Tianocore EDKII UEFI build. ChatGPT did a very good job of detecting the vulnerabilities in this older (August 2021) release. But it chokes on doing anything much larger than the OpenSSL module, which is a tiny fraction of the total UEFI image—due mostly to token context window and file upload size limits. You can see the challenge visually below:

Caption: Relative size of the OpenSSL module to the entire UEFI codebase

In Part 2, subtitled Breaking the Token Barrier, I described my first attempts to use a private LLM on my DGX Spark to analyze larger portions of the UEFI source code base for vulnerabilities. Here, I discovered obstacles:

- Today’s models haven’t been trained on much of the low-level documentation (like the public Intel Software Developers Manual, and the ASSET InterTech SourcePoint JTAG-based debugger’s User Guide) needed to grok (forgive the pun) low-level UEFI firmware.

- Retrieval-Augmented Generation (RAG) doesn’t work properly on much of this documentation. Inference implodes, and hallucinations result.

- Expanding context and file upload size for the large UEFI codebase is difficult in many of the common LLM interfaces, such as Open WebUI, LM Studio, and others.

So, in this article, I’ve taken a “side-trip” to address the limitations of RAG and improve the inference reasoning power of my models. I’ll do this by using Knowledge Graph (KG) technology.

So, you may ask, what is KG technology, and why use it as opposed to plain old text vector databases? That’s a very good question.

Standard text databases treat data as rows and columns (in as many dimensions as is needed), whereas KGs organize facts into a network of interconnected entities, and are built using “triples”, which are:

- Nodes (Entities): People, places, objects

- Edges (Relationships): Lines connecting Nodes that define how they relate

- Properties: Additional details or attributes attached to Nodes or Edges

Viewing data in KG form addresses subtle “blind spots” found in LLMs: specifically, grounding facts and reducing hallucinations, and adding insight through meaningful relationships. Vector databases only know if two pieces of text are mathematically close to each other in a vector space; whereas KGs allow for deterministic logic, knowing how Node A relates to Node B (i.e., the CEO of “X” is located in “Y”).

Confusing? A picture is worth a thousand words, so let’s begin with the end in mind. Below is a short video that captures a simple KG implementation:

Caption: Video of Knowledge Graph for SourcePoint User Guide

As a trial run, I decided to apply KG to ASSET InterTech’s SourcePoint JTAG-based debugger’s User Guide. This document, much like the Intel SDM, contains specialized information that does not appear to be “baked in” to any generally available LLM currently on the web. Applying superior reasoning powers to the esoteric data within these documents will aid in the accuracy and quality of the large codebase analysis.

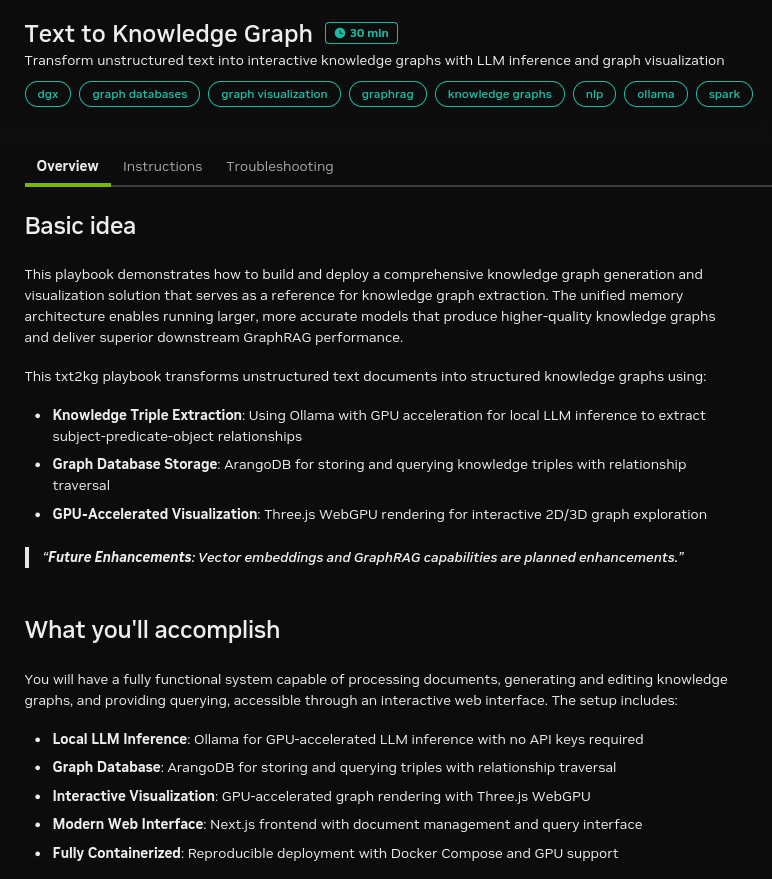

On the DGX Spark, NVIDIA provides a convenient set of Playbooks that help engineers explore and learn about many AI technologies that make up the NVIDIA stack. One of the playbooks is entitled Text to Knowledge Graph (named txt2kg hereinafter). Here’s the overview:

Caption: Text to Knowledge Graph Playbook Instructions

Author’s Note: You might miss it, but the overview section has in the fine print, “Future Enhancements: Vector embeddings and GraphRAG capabilities are planned enhancements.” So, we’ll need to wait a bit until all of the power of Knowledge Graphs for inference can be demonstrated.

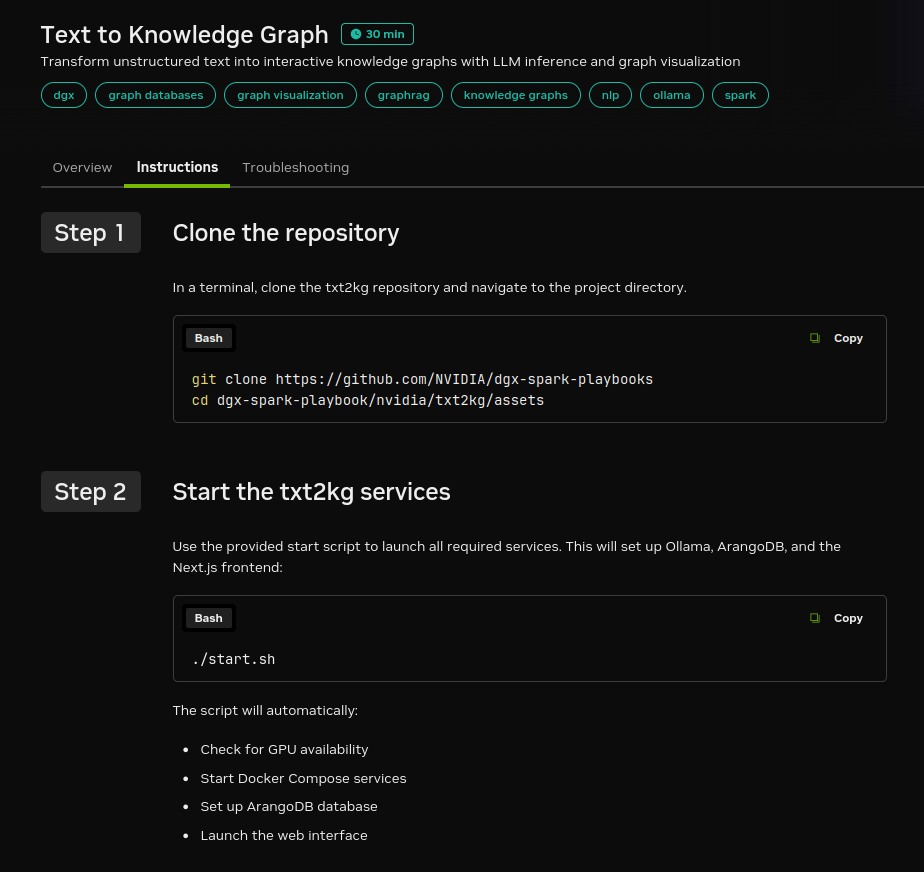

A set of instructions is provided, and they look fairly simple:

Caption: Instructions for txt2kg

And NVIDIA has a couple of videos that demonstrate the use of the txt2kg:

Turn Text Into a Knowledge Graph with 70B LLM on DGX Spark

I’m not a big fan of this one, although it’s short (about two minutes long), so you might as well watch it. It purports to demonstrate txt2kg on a 70B LLM (llama3.1:70b-instruct-q4_K_M) but there’s so much it doesn’t show, or “blurs” out to obfuscate what might be going on under the hood.

DGX Spark Live: Process Text for GraphRAG With Up to 120B LLM

This is worth a watch as well, and it’s longer (about 40 minutes). The only frustrating thing about it is that it purports to run on a 120B LLM (gpt-oss:120b), which is pretty large for the Spark, so we’ll see.

There’s also a couple of useful articles to read in the NVIDIA Forums that proved invaluable:

- Txt2kg Playbook ./start.sh –complete does not start Additional Services (Complete Stack)

- DGX Spark txt2kg playbook discrepancies / CPU fallback questions

Unfortunately, there’s a lot more that needs to be done beyond what’s covered in the above, and there are many pitfalls; I’ll describe some of my own experiences below, in the hope to save readers some time.

But first, it was necessary to divide up the SourcePoint User Guide into many separate constituent markdown (.md) files. txt2kg isn’t designed to swallow one monolithic PDF (in this case, 700+ pages worth, and 4MB in size). And it works with text (.md, .csv, .txt, and .json) only; a lot of the images that are in the SourcePoint User Guide can’t be consumed, and just clog up the works if you attempt to go further. As it turns out, these images aren’t really needed to put together a good KG for this document.

So, first, we’ll use the Docling application (readers may remember my first attempt to use this in Part 2 of this series, Breaking the Token Barrier), to change the PDF to a single markdown file.

As a separate note: from the Part 2 blog in this series, I found that the Intel SDM was not structured well for easy processing. The tables, in particular, don’t lend themselves to easy conversion to txt or md files. It’s a side project (Maybe hire an intern? Or use Copilot, Claude, etc.?) to put this into a form that Docling can easily convert without losing much of the value of the content.

The specific Docling command to run on the SourcePoint PDF is:

docling --to md SourcePoint.pdf --image-import-mode placeholder

The “–image-import-mode placeholder” removes all images.

And then, it’s necessary to chop up this monolithic markdown file into individual .md files for the separate sections within the SourcePoint User Guide. Whether by intent or good fortune, the writers of this document created it in a form that is easily split up by the following command:

csplit --digits=4 --prefix=SP_ SP.md '/^## /' '{*}'

This creates 2,976 individual markdown files, all separate sections with a ## header at the top of each. Perfect to create Nodes, Links and Attributes from!

SP_0000.md

SP_0001.md

SP_0002.md

and so on.

Now, it’s time to follow the instructions in the playbook. I’ll copy these, and editorialize where the instructions are unclear, wrong, or result in errors. Note that by the time you read this, many of these issues may have been fixed by NVIDIA (at least, I hope so).

Clone the DGX playbooks, move to the txt2kg assets directory, just as the documentation says. So far so good:

git clone https://github.com/NVIDIA/dgx-spark-playbooks

cd dgx-spark-playbook/nvidia/txt2kg/assets

Now here’s the first problem with the documentation. If you just follow the instructions as they are, and issue the:

./start.sh

it’ll never work. It only starts up some of the containers. You need to first run:

./start.sh --complete

You will see something like the following:

Checking for GPU support...

✓ NVIDIA GPU detected

GPU: NVIDIA GB10, [N/A]

Using Docker Compose V2

Checking Docker permissions...

✓ Docker permissions OK

Using complete stack (Ollama, vLLM, Pinecone, Sentence Transformers)...

Starting services...

Running: docker compose -f /home/alan/dgx-spark-playbooks/nvidia/txt2kg/assets/deploy/compose/docker-compose.complete.yml up -d

[+] Running 12/13

✔ Network compose_txt2kg-network Created

✔ Network pinecone Created

✔ Network compose_default Created

✔ Volume compose_ollama_data Created

✔ Volume compose_arangodb_data Created

✔ Volume compose_arangodb_apps_data Created

⠏ Container ollama-compose Starting

✔ Container entity-embeddings Started

✔ Container vllm-service Started

✔ Container compose-sentence-transformers-1 Started

✔ Container compose-arangodb-1 Started

✔ Container compose-arangodb-init-1 Started

✔ Container compose-app-1 Created

Error response from daemon: failed to set up container networking: driver failed programming external connectivity on endpoint ollama-compose (e191b0f4e3480d87a068ed110ca998ca66d93a46aef78b273f05203e3adf4c48): failed to bind host port for 0.0.0.0:11434:172.20.0.5:11434/tcp: address already in use

Author’s Note: Now, I’m not one to complain, but you would think that a company like NVIDIA, with a market cap of $5 trillion (at least today), would clean this up a little.

To clear up this daemon error about the container networking driver, I had to issue the command:

sudo lsof -i :11434

You’ll probably see something like:

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

ollama 2099 ollama 3U IPv4 3654 0T0 TCP localhost:11434 (LISTEN)

This is the problem, as ollama (which is running in the background and by default uses port 11434) must be kicked.

So, turn it off from listening to this port by:

sudo systemctl stop ollama

Then, start up its container again:

docker start ollama-compose

Then, re-issue the start command, this time without the –complete option:

./start.sh

At that point, you can download the LLMs you desire. I pulled down three of them (note that the llama3.1:8b is the default, but I also wanted to try out both smaller and larger models to benchmark performance):

docker exec ollama-compose ollama pull llama3.1:8b

docker exec ollama-compose ollama pull llama3.2:3b

docker exec ollama-compose ollama pull llama3.1:70b

Note that it can take the better part of an hour to pull down the 70 billion parameter model.

You can then launch the web interface via your web browser (I use Firefox):

http://localhost:3001/

And you are off to the races.

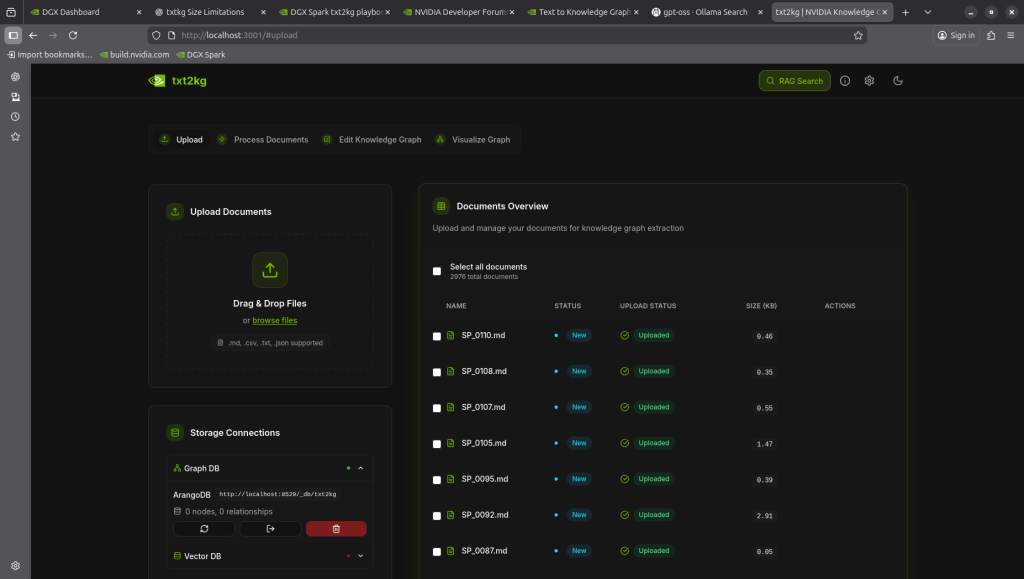

First do an “Upload Documents”:

Caption: Upload Documents Panel for txt2kg

It took about two minutes to load all 2,976 of the markdown files that make up the SourcePoint User Guide in this case. The UI is fairly primitive; there’s no progress bar or similar indicator to indicate that the system is working.

And that’s the general feel of this app, at least currently; it’s clearly a proof of concept and subject to some further polish, when NVIDIA gets around to it.

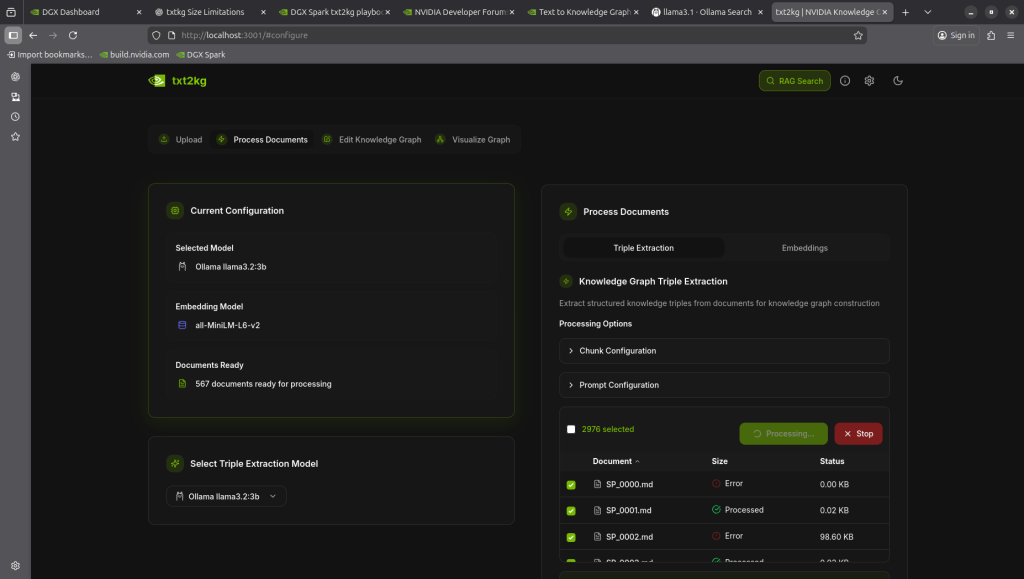

After the file upload, do a Process Documents and “Extract Triples”, Edit Knowledge Graph, and Visualize Graph. Here’s some pictures:

Caption: Process Documents

Note that in Process Documents, there are some of the files marked as “Error”. This is because some of these files were empty, had only ## in them, or contained information that the model (in above case, llama3.2:3b) contained extraneous. It’s interesting that llama3.1:8b accepted all markdown files, whereas llama3.2:3b was smarter.

It took 15 hours for llama3.1:8b to process all the documents. llama3.2:3b took only five hours.

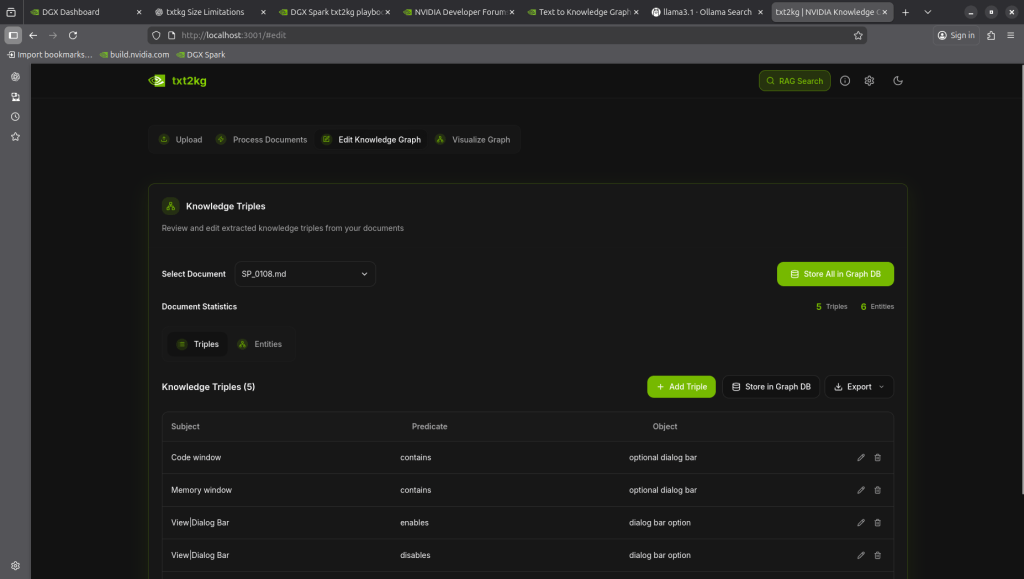

And then click on “Edit Knowledge Graph”:

Caption: Edit Knowledge Graph

I didn’t find the “Edit Knowledge Graph” panel to be too helpful. As I had close to 3,000 files to deal with, it would have been quite tedious to go into each of them and edit their triples.

But a word to the wise: this is where you save your graph database. If you don’t, you might be kissing it goodbye, and have to start over. That is not fun.

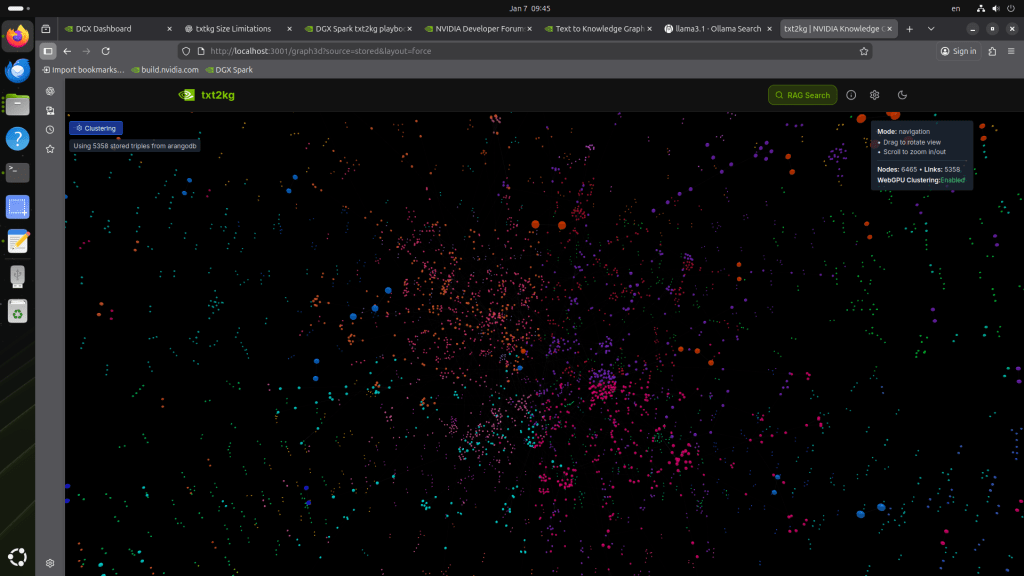

Once the graph DB is saved, click on “Visualize Graph”:

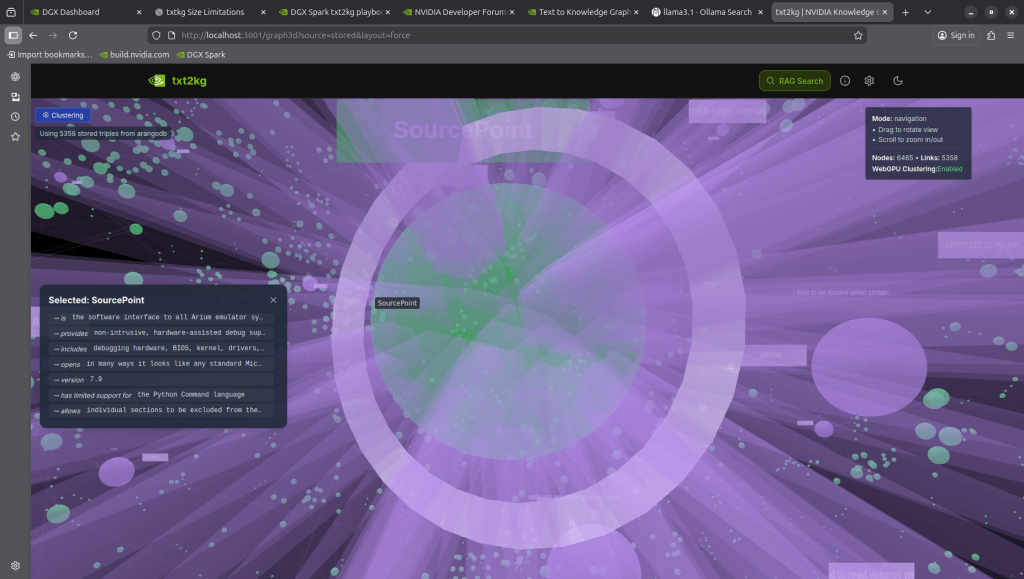

Caption: Visualize Graph

The “Visualize Graph” tab is the best part of the POC demo. Click on the “Clustering” button at the top left, and be sure to enable “Cluster Colors”, to see the graph in all of its glory.

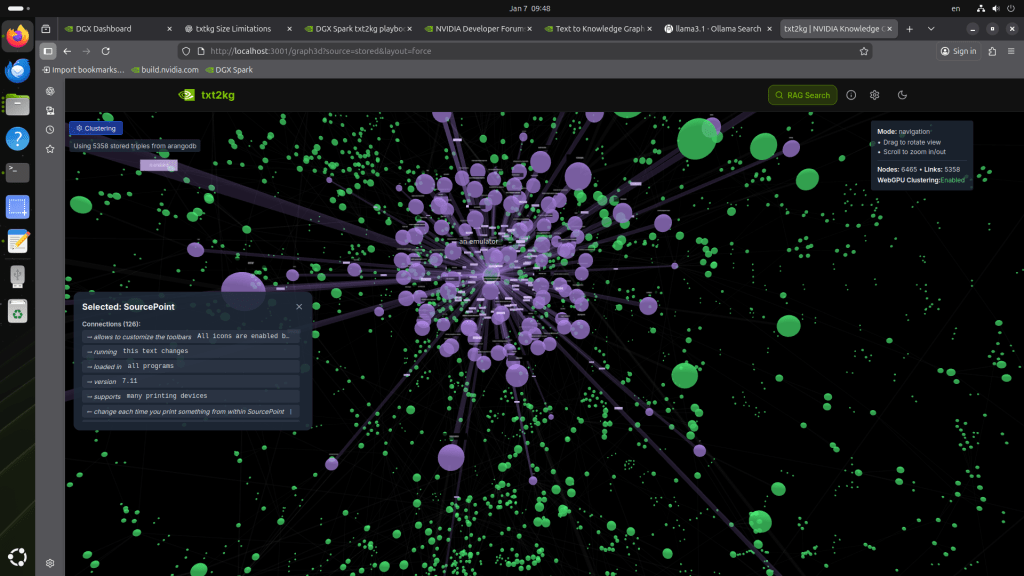

Caption: Visualize Graph with Links to SourcePoint Node

Clicking on a node that has lots of links gives insight into the connections between data.

Caption: An expanded view of Links to SourcePoint Node in Visualize Graph

And finally, just a nice image above as you click on a Node, in this instance “SourcePoint”, that has lots of connections to other data elements within the document.

Here’s that video again, using the llama3.1:8b default model, if you haven’t watched it from above:

Caption: Video of Knowledge Graph for SourcePoint User Guide

At this point, I’ll pause and complain a little bit. Take some of this with a grain of salt, because some of it may be my fault; and hopefully some of the real issues will be resolved in the near future, and be of some assistance to people following in these footsteps in the meantime:

Processing Documents is Slow!

If you follow the playbook instructions, you’ll load the llama3.1:8b model, which is the default, and things work smoothly, except for the fact that the “Extract Triples” took 15 hours to run! Even though everything indicated that the GPUs were being used for inference, according to htop, all of my CPUs were also running at 100%.

“Extract Triples” took only five hours on llama3.2:3b. Mind you, NVIDIA has been doing some optimizations on the Spark recently, and I did do a couple of software updates in between using these two models.

Problems with “Select Triple Extraction Model”

Despite loading up multiple models, “Select Triple Extraction Model” in the Process Documents page would only display four entries, and two of them were NVIDIA models: Nemotron Super 49B, and Nemotron Nano 9B v2:

Caption: Select Triple Extraction Model options

I know that I have more models available, with doing an “ollama list” within the container:

docker exec -it ollama-compose ollama list

NAME ID SIZE MODIFIED

llama3.1:70b 711a9e8463af 42 GB 39 hours ago

llama3.2:3b a80c4f17acd5 2.0 GB 40 hours ago

llama3.1:8b 46e0c10c039e 4.9 GB 40 hours ago

But neither of the NVIDIA models would work, either! When I did a pull on them and tried to select each as my triple extraction model, the app reported a need for “later” versions of ollama in order to run; and I know that I had the latest release of ollama on my DGX Spark.

Getting your added models to show up is tricky. I managed to “wake up” the browser list with a Shift-Ctrl-R once or twice. You might have to do an “rm” of the models in the container to stay within what seems to be a maximum limit of four (4) displayed.

Cleaning Up Before You Start Over

If you quit out and don’t follow a good “hygiene” routine, going back in and just doing a ./start.sh can leave everything in an indeterminate state. You’ll have orphan containers laying around, and other things in various states of disarray; the application will never restart cleanly.

I found that the only way to get txt2kg to restart cleanly was to use the “nuclear” version of the cleanup:

docker compose down

docker compose down -v

docker exec ollama-compose ollama rm <get rid of all of your models>

docker compose down -v –-remove-orphans

And even then, you might have issues with the browser cache, so do a hard reset on that:

Open http://localhost:3001

CTRL-SHIFT-I

Go to Application -> Storage

Right click to clear site data

Hard refresh (CTRL-SHIFT-R)

On one or two occasions, I found it necessary to also reboot my Spark. There’s some unclear references in the support documentation online to “zombie” unified memory states, and ollama discovery script timeouts while scanning the high-performance interconnects on the Spark. Ugh.

The above might be worst case. Hopefully this POC becomes more polished with time.

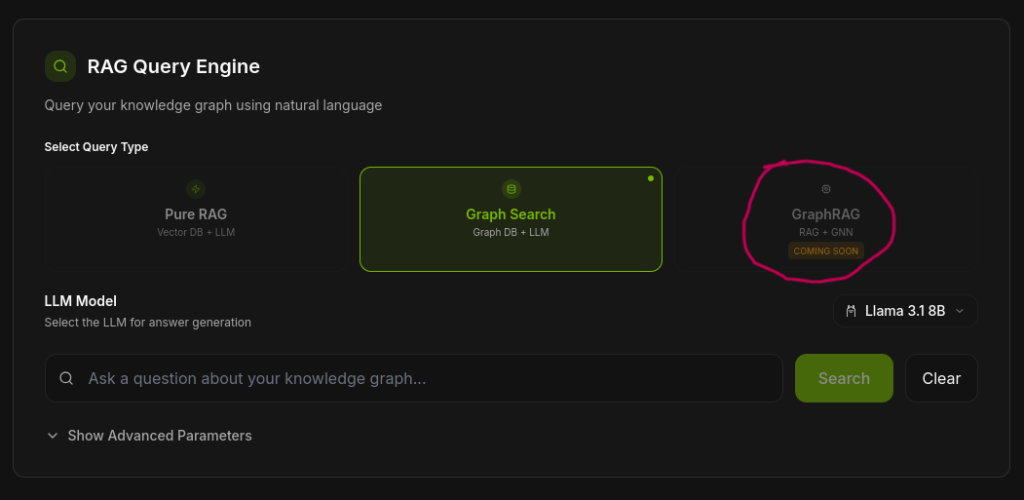

GraphRAG is Not Available Yet

Clicking on the “RAG Search” button at the top takes you to the RAG Query Engine, where you’re presented with three options: Pure RAG, Graph Search, and GraphRAG:

Caption: Retrieval-Augmented Generation (RAG) Search

With this, you can search your document instantly. Note that “Pure RAG (Vector DB + LLM)” on the left is not working. Our query now can use “Graph Search (Graph DB + LLM)”. And on the “GraphRAG (RAG -> GNN – graphical neural network)” there’s a small label that says “COMING SOON” (see the part circled in red). I hope so! GraphRAG will be able to answer questions that text-only RAG cannot, and will exceed even Graph Search in its reasoning capabilities. As ChatGPT says: “Pure RAG guesses, Graph Search explains, and GraphRAG reasons”. This might be hyperbole; but we will see.

AND FINALLY, HOW DID IT WORK?

Well, not as well as expected. I’m guessing that these small models, with their default tiny context windows, can’t really do the heavy lifting, even with the inferencing boost given from txt2kg, to compete with the frontier models. For example, when I fed ChatGPT the SourcePoint User Guide, and handicapped it by restricting its analysis solely to the document:

“Restrict your analysis to this, and only this, attached PDF. Given that, how do you go about placing a breakpoint at the address of a specific interrupt handler, by looking at the IDT?”

It responded very precisely and accurately:

Using only the attached SourcePoint 7.12 PDF, the workflow is:

- Open the Descriptors Tables window

- The manual says the Descriptors windows are used to examine/modify descriptor table entries and can be opened via View | Descriptors (or the toolbar icon). SourcePoint

- This window has tabs for GDT / IDT / LDT / LDTR, and explicitly calls out the IDT tab for viewing/modifying IDT entries. SourcePoint

- Select the IDT entry for the interrupt vector you care about

- In the IDT, each interrupt/trap gate entry contains a selector and an offset that “point to the start of an interrupt or trap routine.”

- So: find the desired vector’s gate descriptor and note its offset (and selector if you care about context).

- Jump from that IDT entry to code, then set the breakpoint

- The Descriptors window context menu provides “View as Code”, which “opens a Code window at the address of the selected descriptor table entry,” and then “breakpoints can be set through the open Code window.”

- Once you’re in the Code window at that handler entrypoint, set an execution breakpoint there (SourcePoint supports setting execution breaks from the Code window and via the breakpoint dialogs).

That’s the “IDT → handler address → breakpoint” path the manual describes: use Descriptors → IDT tab to get the handler pointer, then View as Code, then set the breakpoint in the Code window.

Whereas my model, well, not so well:

Based on the provided context from the SourcePoint knowledge graph, I found relevant information to answer your question.

To place a breakpoint at the address of a specific interrupt handler by looking at the IDT (Interrupt Descriptor Table), you can follow these steps:

- Locate the Interrupt Handler Address: Identify the address of the interrupt handler in the IDT. This can be done by examining the IDT entries, which are typically stored in memory.

- Set Breakpoint Name: In SourcePoint, create a breakpoint name using the syntax

Breakpoint name { e[nabled] | d[isabled] }. For example, if you want to enable the breakpoint, useBreakpoint name { e }. - Specify Address: Use the address of the interrupt handler as the location for the breakpoint. You can specify this by using the syntax

location = address, whereaddressis the memory address of the interrupt handler. - Set Breakpoint Options: In SourcePoint, you may need to enable the “Run continuous” option and choose the number of passes.

Here’s an example of how you might set up a breakpoint in SourcePoint:

- Create a breakpoint name:

Breakpoint name { e } - Specify address:

location = 0x12345678(replace with the actual address of the interrupt handler) - Set breakpoint options: Enable “Run continuous” and choose the number of passes.

Please note that this is a general outline, and specific details may vary depending on your SourcePoint version and configuration. If you’re unsure about any step or have further questions, I recommend consulting the SourcePoint documentation or seeking assistance from an expert.

That’s not very helpful!

So, where do we go from here? I managed to get both 3B and 8B models to run; could I really run the 120-billion-parameter gpt-oss:120b model on the Spark, as NVIDIA says you can; and get a big enough context window? That might be difficult. I was skeptical. That is a huge model, and I might run into as yet unforeseen, time-wasting difficulties. So, I decided to start with something a little more modest, the 70-billion-parameter llama3.1:70b first. I’ll write about that in a forthcoming blog post!