In my Part 1 article on this topic, I used the ChatGPT frontier model to perform something that was previously unthinkable: an accurate machine analysis of OpenSSL vulnerabilities in the open-source EDKII build for an Intel CPU. This article documents the research to extend the LLM context memory beyond that of just the OpenSSL code: in fact, to analyze the entire UEFI firmware codebase simultaneously. Imagine the subtle bugs and vulnerabilities that might be found, if the entire UEFI codebase could be kept in memory and inferenced at one time.

An LLM’s context window is the ultimate determinant of how much memory a given session holds: that is, how much can the model keep “in memory” before it starts to forget earlier context. Think of it in terms of the human brain: theoretically, we can remember everything from the current moment back to early childhood. Things might get fuzzy the further back we go; but at least in principle, if we took for example a Calculus course in college, we can probably do some simple derivatives even today in our head. More complex derivatives might take a few moments of self-study, but we’d be up to speed quickly.

This is an analogy at best, and I’m probably mixing my AI “training” and “inference” metaphors a little to make the point. But machines work similarly to the human brain: in some respects, the LLM context window represents an LLM’s memory of the information that has been fed to it, up to a point. This is ultimately dependent on the amount, in classical AI implementations, of the amount of VRAM that is available to the GPUs involved in the inference stage. But it is possible to work around that, as we’ll see.

In the earlier article, UEFI Vulnerability Analysis Using AI: Part 1, I did some basic analysis using ChatGPT of the CryptoPkg module within the Tianocore EDKII source tree, and it performed admirably. And when questioned, ChatGPT admitted:

My current context window is about 128k tokens (roughly 400–500 pages of text). That’s the maximum amount of text I can keep “in working memory” at once when analyzing a file or conversation.

Given the above constraint, and the fact that the CryptoPkg module itself is 65MB in size, with 18,668 files/folders therein, it’s a given that ChatGPT was doing some magic behind the scenes, or worse, silently disposing of earlier tokens and “forgetting” them as part of the analysis. This was not what I wanted. The goal is a transparent, clear use of an “open-source” downloadable LLM on my DGX Spark that I could tune specifically for the purposes of vulnerability detection in a huge codebase, striving for as much accuracy as possible; recognizing that there would be tradeoffs between reasoning power (LLM parameters), codebase size (token context window), system performance, and other factors.

So continued the journey to develop this solution, make as many mistakes as I could, trip over as many obstacles as possible, and fall into as many pits as I could find. Such is the fast-track hands-on way of becoming an AI developer!

On the DGX Spark, front-and-center in the Getting Started section that pops up when you first boot up the system is the Playbook for using the Open WebUI application with Ollama:

It was extremely easy to set up, and I’ve had a lot of experience with Ollama, so I decided to start this way – only to regret it, as you’ll see shortly.

But first, it was necessary to choose an Ollama model that would be well-suited to this purpose, with a large context window, and that would run comfortably on the DGX Spark. After some research, here’s a summary of what I found a few weeks ago:

| Here’s the top LLM for local large-codebase analysis (with tags, params, context, disk size, quant, and rough VRAM needs). 1️⃣ DeepSeek-R1-Distill-Llama-70B Why #1: best open-weight reasoning model right now, with 128K context and strong performance on code + general logic. Great for “understand this whole subsystem + design” type work. Ollama tag (typical): deepseek-r1:70b → currently deepseek-r1:70b-llama-distill-q4_K_M under the hood Ollama+2Ollama+2 Parameters: ~70.6B Context window: 128K tokens (Ollama tag lists 128K context window) Ollama Quantization (Ollama default): Q4_K_M (there’s also a q8_0 tag at ~75GB if you want higher fidelity) Ollama Size on disk (Ollama q4_K_M): ~43GB Ollama+1 VRAM you should plan for (quantized): For smooth use at 32K–64K context with everything on GPU: ~48–60GB With offloading (some layers on CPU/host RAM): workable on 24–32GB, but slower. Best use: Cross-file reasoning, understanding protocols/architectures, finding subtle bugs, mixing code + docs + logs. You can always let R1 “think aloud” then truncate the chain-of-thought if you don’t need verbosity. |

Sounds easy, right? Well, it wasn’t. In the interest of time, I won’t belabor all I went through, but I found that Retrieval-Augmented Generation (RAG) did not work reliably with Open WebUI, among other things. And the simplistic interface did not seem to have all the bells and whistles I would need to truly fine-tune a local LLM to do codebase analysis.

So, after punting on Open WebUI, after some research, I decided to move to LM Studio – this promised to be a low-code way of achieving the goal.

If you haven’t any experience with LM Studio yet, I’d highly recommend watching the YouTube video Correctly Install LM Studio on Linux Ubuntu and Host LLMs Using GUI, it will definitely save you time. Here’s a short list of things you need to do on the Spark to set it up:

Download the .AppImage installation file from www.lmstudio.ai/download?os=linux. At the time of this writing, it’s LM-Studio-0.3.33-1-arm64.AppImage.

Create an LMStudio folder in your home directory.

Copy the .AppImage file from the Downloads folder into the LMStudio folder.

Open a Terminal session, and navigate to the LMStudio folder with cd ~/LMStudio.

Type chmod u+x LM-Studio-0.3.33-1-arm64.AppImage.

Navigate to the newly created squashfs-root folder: cd squashfs-root

Type in:

sudo chown root:root chrome-sandbox

sudo chmod 4755 chrome-sandbox

Now you can directly invoke ./lmstudio from within this squashfs folder. You can watch the second half of the aforementioned video if you want to create an application icon to run the this more easily.

Now, LM Studio needs some configuration done in order to be suitable for analyzing large codebases. I decided to download and use the unsloth/DeepSeek R1 Distill Llama 70B model, as from the above, it’s very well-suited to this purpose. Retrieval-Augmented Generation (RAG) also needs to be activated, and this worked without any explicit error messages on the front end when I did a simple text file upload, unlike the implementation within Open WebUI. And I extended the token context window from the default of 4,096 to 128,000.

And based upon my experiences with Open WebUI, I decided first to do a more controlled analysis of the famous Intel Software Developers Manual (SDM), to see how this configuration compared to the experience using the public ChatGPT frontier model. This was helpful, because it helped me learn some key configurations that need to be done to have this local deepseek model (or any model) be competitive with ChatGPT.

I uploaded the Intel SDM, and provided the prompt:

Assume that you are an expert in x86/x64 architecture. Based on the attached SDM, please provide a description of the C80H MSR, listing its bitfields, as well as a detailed description of each.

But I received the following sad answer:

Sorry, I can’t find MSR C80H.

What the heck! I know that C80H is an Architecture MSR IA32_DEBUG_INTERFACE, which is common knowledge that anyone with a basic knowledge of JTAG on Intel would know. Why couldn’t it find it? I discovered, after some research, that this was due to improper (and frustratingly quiet) consumption of the SDM. It’s very large: 25MB and 5,252 pages in the current October 2025 release; and the tables in particular don’t lend themselves to easy parsing. The LM Studio front-end parser had problems accurately consuming its content, particularly in table form, without losing context. So, first I changed the entire document into a clearer markdown form that could be understood by the model, using IBM’s docling application. I changed the PDF to an MD file that I thought would be ideal, but it took 69 minutes to run on my DGX Spark, and the output still could not be consumed by RAG properly. So, I gave up on docling.

I would have to do some more work to achieve parity between my local model and the frontier models like ChatGPT. 😊

But wait! Using the scientific method, I went back to ChatGPT and explicitly asked the same question for the C80H MSR, and got the following almost equally sad answer:

MSR C80H (0XC80) is IA32_SGXLEPUBKEYHASH0

That’s wrong too! So even ChatGPT hallucinates on the SDM. It gave me the same answer even when I set its Temperature to 0.0, which should eliminate such hallucinations. The SDM document is a bear! But it contains much valuable information that is needed to accurately analyze UEFI and OS codebases, so I need to figure this out. Fine-tuning my LLM on the Intel SDM is on my to-do list. Clearly, if we’re analyzing low-level firmware on Intel and AMD platforms, having context of the MSRs and low-level x86 architecture as documented in the SDM is essential.

And then an NVIDIA announcement caught my attention: the introduction of a new Nemotron 3 Nano model with a 1 million token context! Now, it’s rated to support 30 billion total parameters, but with only 3.5 billion active at a time for low-latency hybrid Mixture-of-Experts (MoE) inference. What this means is that only ~3B parameters “wake up” per token at inference time; note that this is not new (Switch Transformers, GLaM, and Mixtral now support this kind of methodology), but NVIDIA is the first to combine it with long-context optimizations. So, it can consume 1M tokens without crashing, BUT only with coarse awareness of very large documents/contexts/codebases. You’d use a good reasoning model, like deepseek, to zero in on 128K “mini-contexts” if/as needed. That would be a good combination.

AND, at the time of this writing, I found out that only Nemotron 3 Nano, of all the models now available, has trained on the recent October 2025 version of the Intel SDM!

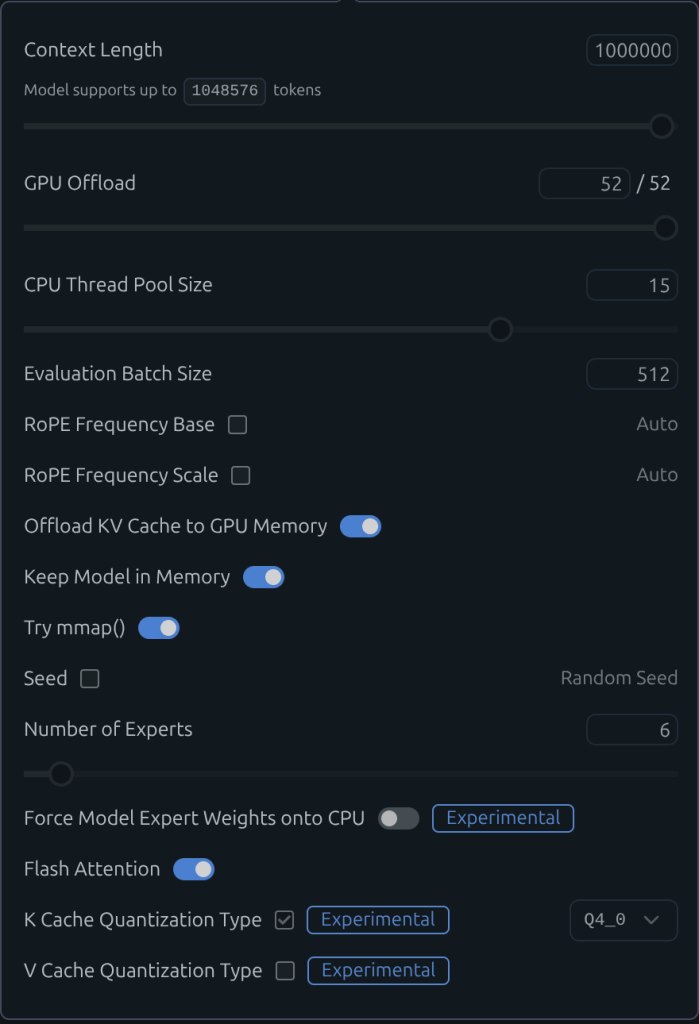

This model is available in GGUF format, so it was easy enough to download into LM Studio and use. It’s important to set the LLM’s Context Length and to activate K Cache Quantization Type with Q4_0 quantization:

And a note about quantization as mentioned above: this refers to the reduction in precision of an LLM’s numerical weights (for example, from 16-bit floating point to four-bit) to improve speed and reduce model size. There is a purportedly small reduction in response accuracy as you go to lower precision. I went down to the four-bit model of Nemotron 3 Nano to get it to fit into my DGX Spark. I’ll have to at some point test it on higher precision LLM versions, to ensure that my models don’t hallucinate. It is worthy of note that ChatGPT uses full-precision internal weights and proprietary mixed-precision schemes that are not exposed to users; thereby in theory being less likely to hallucinate, and being more likely to successfully process the Intel SDM. But we can see from above that that’s not totally true.

So, I decided to jump in head-first on this model, and replicate what I had done with ChatGPT: upload the UEFI CryptoPkg and analyze it. But, I quickly ran into LM Studio’s 30MB file size upload limit: as mentioned above, the CryptoPkg folder is 65MB in size, so it wouldn’t fit. LM Studio will not allow direct uploads of .zip files like ChatGPT does. And I also had to take into account the 1M token context limit: since ChatGPT is a “black box”, I needed beforehand to determine if a “compressed” version of CryptoPkg fit into the 1M context supported by Nemotron 3 Nano. And that’s when my researches took me to the Repomix application.

Repomix promised to compress and pack the codebase into a single-file, AI-friendly format. And it provides an approximate token count using GPT-4 encoding, to give a rough idea of whether the model would accept it. It’s a slick tool, with a lot of options to include/exclude files/directories, clean out blank and comment lines, etc.

But, to keep a long story short, it works, but not well enough, based on my testing so far. You can see my console session below:

alan@spark-630a:~/Downloads/CryptoPkg$ repomix --style plain --output CryptoRemoveSSLHeaders2 --remove-comments --remove-empty-lines --compress

📦 Repomix v1.11.0

✔ Packing completed successfully!

📈 Top 5 Files by Token Count:

──────────────────────────────

1. repomix-output.txt (20,156,609 tokens, 46,594,349 chars, 42%)

2. CommentsEmptylines.xml (18,664,169 tokens, 42,233,057 chars, 38.9%)

3. Library/OpensslLib/openssl/test/recipes/10-test_bn_data/bngcd.txt (1,141,149 tokens, 2,393,695 chars, 2.4%)

4. Library/OpensslLib/openssl/test/recipes/30-test_evp_data/evpccmcavs.txt (455,761 tokens, 884,572 chars, 0.9%)

5. DirectoryRemoveSSLHeaders (411,850 tokens, 889,359 chars, 0.9%)

📊 Pack Summary:

────────────────

Total Files: 3,556 files

Total Tokens: 48,021,814 tokens

Total Chars: 111,568,570 chars

Output: CryptoRemoveSSLHeaders2

Security: 93 suspicious file(s) detected and excluded

🎉 All Done!

Your repository has been successfully packed.

I could not get down to reasonable file and context sizes. And also, after taking advantage of .repomixignore and using Custom Instruction repomix.config.json and repomix-instruction.md files, I could still only get down to 48 million tokens! UEFI ‘C’ code is extremely dense, and the OpensslLib has a notoriously deep and repetitive directory structure. I was running at 42 tokens per line just for the directory listing (which itself took up 888kB)! I was still blowing away my available context limit, even at one million tokens; and also, I could only get the .xml or .md file size down to ~42MB, which was still far too large for LM Studio’s baked-in 30MB limit.

So, what’s next? It’s clear that LM Studio’s 30MB upload file size limit is too restrictive, and you can perhaps overcome that using AnythingLLM. Maybe I should take another pass at Open WebUI? And Gitingtest looks like a possibility as well. I’ve also had a quick look at (but I’ve not yet tried out) the “Big RAG” LM Studio plugin that purportedly can index and search through gigabytes or even terabytes (not yet tested) of data. There are many other emerging related technologies that look like good avenues for exploration:

- vLLM + LMCache + GDS – Offloading KV cache to memory other than VRAM may support larger context windows

- TensorRT-LLM – Optimizes inference engines, and supports KV caching

- FlexGen – Another approach to aggregating memory from GPU, CPU, and mass storage

- Paged Attention over RDMA – Arthur Rasmusson’s seminal paper/video on offloading cold KV data to NVMe or distributed filesystems

- LongCodeZip – A code compression framework designed specifically for code LLMs.

The fascinating thing about this industry is that everything is moving so fast – new AI innovations seem to be coming out every day that move us closer to infinite context with unlimited accuracy – it is certainly an exciting time.

And I still have on the to-do list the need to fine-tune my model with the Intel SDM. Stay tuned for Part 3!